2014 NERSC User Survey Results

Executive Summary

Satisfaction with NERSC remained extremely high in all major categories in 2014, according to 673 users who responded to the 2014 NERSC user survey. The survey satisfaction scores mirrored those achieved in 2013, a year that saw record or near-record scores across the board. The 673 responses were a new high, surpassing the previous mark of 613 in 2013. Those users accounted for 72 percent of all computing hours used at NERSC in 2014.

The overall satisfaction rating of 6.50 on a 7-point scale equaled the best ever recorded and matched the 2013 score. The average of all satisfaction scores was 6.33, also the same as the previous year. Only three of the 105 satisfaction questions showed a decrease compared to the previous year.

Survey Format

NERSC conducts its yearly survey of users to gather feedback on the quality of its services and computational resources. The survey helps both DOE and NERSC staff judge how well NERSC is meeting the needs of users and points to areas where NERSC can improve.

The survey is conducted on the web and in 2014 consisted of 105 satisfaction questions that are scored numerically. In addition, we solicit free-form feedback from the users. In December 2014, 5,000 authorized users (those with registered accounts who have signed the computer policy use form) were invited by email to take the 2014 user survey. The survey was open through January 19, 2015.

7-Point Survey Satisfaction Scale

The survey uses a seven-point rating scale, where “1” is “very dissatisfied” and “7” indicates “very satisfied.” For each question the average score and standard deviation are computed.

| Text Value | Numerical Value |

|---|---|

| Very Satisfied | 7 |

| Mostly Satisfied | 6 |

| Somewhat Satisfied | 5 |

| Neutral | 4 |

| Somewhat Dissatisfied | 3 |

| Mostly Dissatisfied | 2 |

| Very Dissatisfied | 1 |
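As a minimal sketch of how a per-question average and standard deviation could be derived from the scale above: the report does not say whether the sample or population standard deviation was used, so the sample form (`statistics.stdev`) is an assumption here, and the responses in the example are hypothetical rather than survey data.

```python
from statistics import mean, stdev

# Text-to-number mapping taken from the 7-point scale table above.
SATISFACTION_SCALE = {
    "Very Satisfied": 7,
    "Mostly Satisfied": 6,
    "Somewhat Satisfied": 5,
    "Neutral": 4,
    "Somewhat Dissatisfied": 3,
    "Mostly Dissatisfied": 2,
    "Very Dissatisfied": 1,
}


def summarize(responses):
    """Return (count, average, sample standard deviation) for text responses."""
    scores = [SATISFACTION_SCALE[r] for r in responses]
    return len(scores), mean(scores), stdev(scores)


# Hypothetical responses, for illustration only (not actual survey data).
example = ["Very Satisfied", "Very Satisfied", "Mostly Satisfied", "Neutral"]
n, avg, sd = summarize(example)
print(n, round(avg, 2), round(sd, 2))
```
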

3-Point Usefulness and Importance Scale

Questions that asked if a system or service was useful or important used the following scale.

| Text Value | Numerical Value |

|---|---|

| Very Useful (or Important) | 3 |

| Somewhat Useful (or Important) | 2 |

| Not Useful (or Important) | 1 |

Changes from one year to the next were considered significant if they passed the t-test criteria at the 90% confidence level.
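The significance check can be sketched from the published summary statistics alone. The report does not say which t-test variant was used, so this example assumes Welch's unequal-variance test, two-sided, with a normal approximation to the critical value (reasonable when each year has hundreds of responses). The function names are illustrative. The input figures are the two "Batch queue wait times" rows for Edison that appear in this report (383 responses averaging 4.87, and 223 responses averaging 5.36).

```python
from math import sqrt
from statistics import NormalDist


def welch_t(n1, mean1, sd1, n2, mean2, sd2):
    """Welch's t statistic and approximate degrees of freedom from summary stats."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (mean1 - mean2) / sqrt(v1 + v2)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def is_significant(t, confidence=0.90):
    """Two-sided test; normal approximation to the t critical value (assumption)."""
    critical = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return abs(t) > critical


# Edison batch queue wait times: later-year row vs. earlier-year row.
t, df = welch_t(383, 4.87, 1.56, 223, 5.36, 1.63)
print(round(t, 2), round(df), is_significant(t))
```

With small groups (for example, the few dozen PDSF respondents) the normal approximation is too loose, and a proper t distribution with `df` degrees of freedom would be the safer choice.
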

Overall Satisfaction

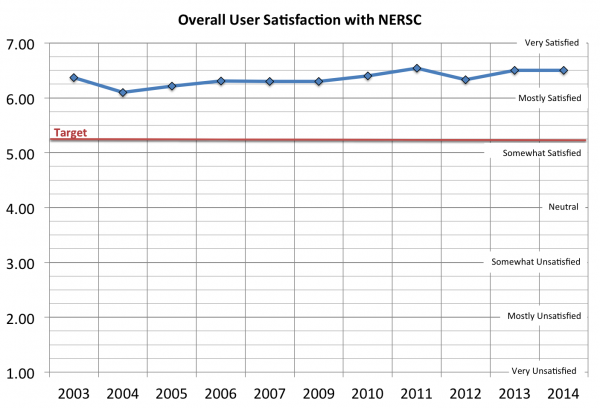

The average response to the item "Please rate your overall satisfaction with NERSC" was 6.50 on the seven-point satisfaction scale. This was the highest rating ever (to within statistical error) since the survey was created in its current form in 2003.

The following figure shows the overall satisfaction rating from 2003-2014. The red line labeled "Target" is the minimum acceptable DOE target for NERSC.

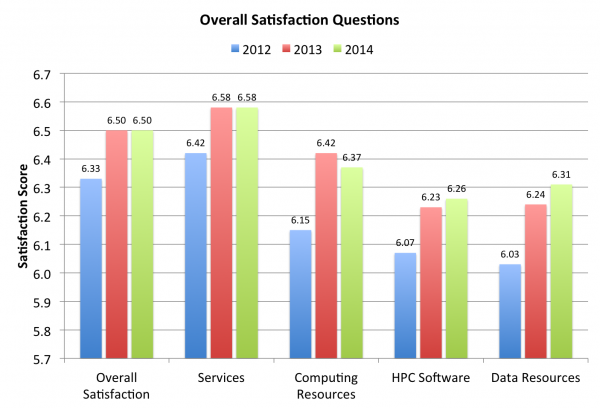

Overall Satisfaction Questions

Satisfaction was the same as in 2013 in all five areas surveyed in the first section of the survey: "Overall Satisfaction." As with the "Overall Satisfaction with NERSC" score, none of the five areas has ever received a statistically significantly higher rating. (Results shown here include responses from all NERSC users, including JGI and PDSF users, for 2012, 2013, and 2014.)

Questions Asked on the "Overall Satisfaction" Survey Page

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2013 to 2014 |

|---|---|---|---|---|

| NERSC Overall | 666 | 6.50 | 0.80 | - |

| Services | 640 | 6.58 | 0.78 | - |

| Computing Resources | 660 | 6.37 | 0.87 | - |

| Data Resources | 563 | 6.31 | 1.02 | - |

| HPC Software | 554 | 6.26 | 1.02 | - |

| Total for 5 Questions | | 6.41 | 0.89 | - |
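The 6.41 in the "Total" row appears consistent with a response-weighted mean of the five question averages. This is an inference from the published numbers, not something the report states, and the helper names below are illustrative. A short sketch comparing the weighted and unweighted means:

```python
# (topic, number of responses, average rating) copied from the table above.
rows = [
    ("NERSC Overall", 666, 6.50),
    ("Services", 640, 6.58),
    ("Computing Resources", 660, 6.37),
    ("Data Resources", 563, 6.31),
    ("HPC Software", 554, 6.26),
]


def weighted_mean(rows):
    """Mean of the averages, weighted by each question's response count."""
    total_responses = sum(n for _, n, _ in rows)
    return sum(n * avg for _, n, avg in rows) / total_responses


def simple_mean(rows):
    """Unweighted mean of the per-question averages."""
    return sum(avg for _, _, avg in rows) / len(rows)


print(round(weighted_mean(rows), 2))
print(round(simple_mean(rows), 2))
```

Because the published averages are already rounded to two decimals, a calculation like this can only reproduce the totals approximately.
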

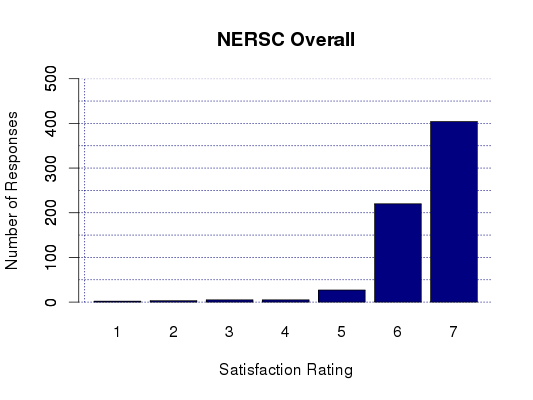

The most common rating in all five categories was "Very Satisfied (7)". The distribution for NERSC Overall is shown below.

Other Overall Satisfaction Questions

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2013 to 2014 |

|---|---|---|---|---|

| Genepool | 59 | 6.17 | 0.81 | - |

| Security | 358 | 6.71 | 0.69 | - |

| Consulting | 479 | 6.64 | 0.80 | - |

| Account Support | 521 | 6.71 | 0.74 | +0.09 |

| PDSF | 51 | 6.43 | 1.14 | - |

| Project Global File System | 278 | 6.61 | 0.71 | - |

| HPSS | 295 | 6.45 | 0.89 | - |

| Web Site | 520 | 6.53 | 0.72 | - |

| Global Scratch File System | 367 | 6.53 | 0.84 | - |

| Hopper | 416 | 6.27 | 0.89 | -0.19 |

| Carver | 265 | 6.36 | 0.87 | - |

| Projectb File System | 108 | 6.42 | 0.86 | - |

| Edison* | 390 | 6.31 | 0.88 | - |

| NX | 135 | 6.10 | 1.15 | - |

Highest Rated Items

The 10 highest-rated items (of all 105 satisfaction questions) on the survey involved data storage systems (6 items), consulting and account support (2 items), and NERSC networking and cybersecurity (2). This tells us that users think NERSC takes good care of their data and makes it readily available, and provides excellent consulting, account support, networking, and cybersecurity.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2013 to 2014 |

|---|---|---|---|---|

| Project File System Data Integrity | 265 | 6.77 | 0.59 | +0.12 |

| Project File System Availability | 265 | 6.75 | 0.55 | - |

| Account Support | 521 | 6.71 | 0.74 | +0.09 |

| NERSC Cybersecurity | 443 | 6.71 | 0.65 | - |

| HPSS Data Integrity | 263 | 6.71 | 0.64 | - |

| HPSS Availability | 269 | 6.70 | 0.65 | - |

| Global Scratch Availability | 350 | 6.66 | 0.73 | - |

| Consulting Overall | 479 | 6.64 | 0.80 | - |

| Internal NERSC Network | 371 | 6.63 | 0.73 | - |

| Global Project File System Overall | 278 | 6.61 | 0.71 | - |

Lowest Rated Items

The 10 lowest-rated items (of all 105 satisfaction questions) on the survey involved the batch queues and the associated wait times (5), and data policies and analysis software (5). Batch wait times on Hopper and Edison were below the NERSC minimum target of 5.25, reflecting the strong demand for access to NERSC systems.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2013 to 2014 |

|---|---|---|---|---|

| Data Analysis Software | 258 | 5.98 | 1.26 | - |

| Hopper Batch Queue Structure | 397 | 5.97 | 1.11 | - |

| Long Term Data Retention | 277 | 5.96 | 1.27 | - |

| Workflow Software | 200 | 5.91 | 1.32 | - |

| Visualization Software | 200 | 5.88 | 1.23 | - |

| Edison Batch Queue Structure | 377 | 5.76 | 1.24 | - |

| Carver Batch Wait Time | 160 | 5.63 | 1.28 | - |

| Hopper Batch Wait Time | 404 | 5.17 | 1.52 | - |

| Edison Batch Wait Time | 383 | 4.87 | 1.56 | -0.50 |

Demographic Responses

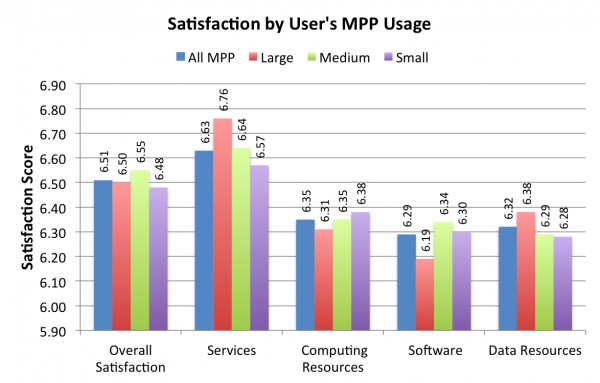

Large, Medium, and Small MPP Users

For purposes of survey analysis, users were divided into those who used more than 3 million MPP hours (Large), between 500,000 and 3 million MPP hours (Medium), and fewer than 500,000 MPP hours (Small). Overall, there was not a great difference among the groups, in contrast to the previous year when larger users expressed greater satisfaction with NERSC.
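The grouping rule can be written as a small classifier. This is a sketch only: the constant and function names are hypothetical, and placing usage of exactly 500,000 or exactly 3 million hours in the Medium group is an assumption, since the report does not say how boundary values were handled.

```python
# Thresholds in MPP hours, taken from the grouping described above.
LARGE_THRESHOLD = 3_000_000
SMALL_THRESHOLD = 500_000


def usage_group(mpp_hours):
    """Classify a user as Large, Medium, or Small by MPP hours used."""
    if mpp_hours > LARGE_THRESHOLD:
        return "Large"
    if mpp_hours < SMALL_THRESHOLD:
        return "Small"
    # Assumption: boundary values (exactly 500,000 or 3,000,000) count as Medium.
    return "Medium"


for hours in (120_000, 750_000, 4_200_000):
    print(hours, usage_group(hours))
```
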

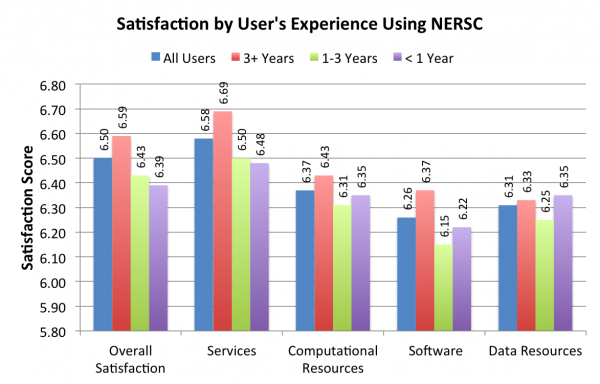

Satisfaction by NERSC Experience

Users who had been computing at NERSC longer reported more satisfaction in most areas, the exception being data resources, where new users were the happiest.

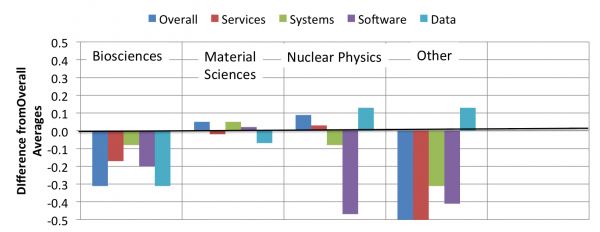

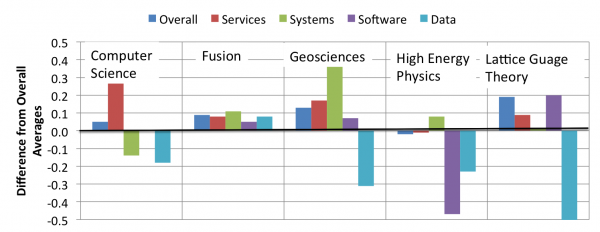

Scientific Domains

While users in all scientific domains rated NERSC highly, there were some differences among the groups. The plots below show differences from the average of all user responses. Researchers in accelerators, applied math, chemistry, geosciences, lattice gauge theory, and fusion research ranked NERSC higher than average, while scores were lower among users in astrophysics and biosciences. The reasons for these variations are not immediately clear and warrant further investigation.

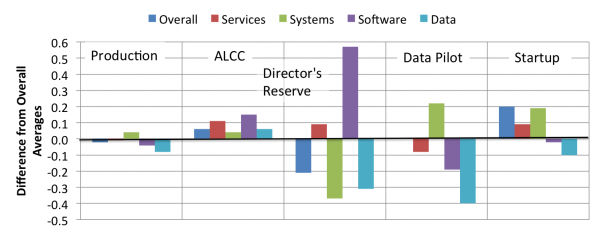

Satisfaction by Allocation Type

Projects receive allocations via a number of methods. "Production" accounts are allocated by DOE program managers through the ERCAP allocations process, "ALCC" (ASCR Leadership Computing Challenge) accounts are allocated by DOE's Office of Advanced Scientific Computing, the NERSC Director has a reserve of time to allocate, NERSC awards small "Startup" accounts, and there was a "Data Pilot" program in 2013.

Production accounts make up the vast majority of accounts (and thus survey responses), so their responses define the average and that group shows little variation from it. ALCC and Startup users rated NERSC higher than average in all categories, while Director's Reserve and Data Pilot users had mixed responses.

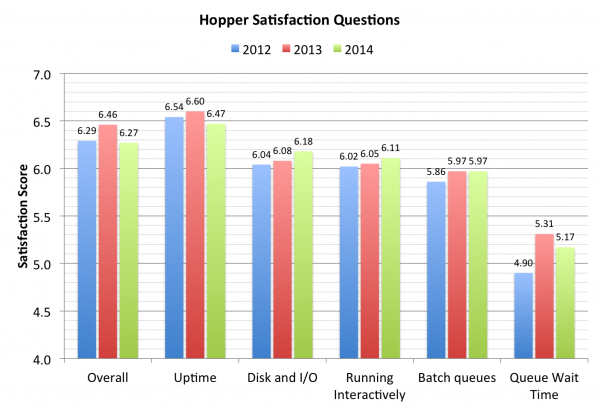

Hopper

Hopper has been a stable, productive system over the last three years, and its satisfaction scores remained high, though down slightly from 2013. The addition of the production Edison system in 2014 helped relieve some of the demand on Hopper, but the rating for queue wait times still fell to 5.17, although it remained above the pre-Edison value of 4.90.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2013 to 2014 |

|---|---|---|---|---|

| Hopper Overall | 416 | 6.27 | 0.89 | -0.19 |

| Uptime | 405 | 6.47 | -0.19 | - |

| Disk Configuration and I/O | 385 | 6.18 | 1.07 | - |

| Ability to run interactively | 299 | 6.11 | 1.13 | - |

| Batch queue structure | 397 | 5.97 | 1.11 | - |

| Batch queue wait times | 404 | 5.17 | 1.52 | - |

| Total for 6 Questions | | 6.02 | 1.08 | |

Representative User Comments

" 1. Hopper and Edison were clearly oversubscribed during at least the third quarter of this last calendar year. Turnaround became unworkable for a number of projects. Only more hardware or smaller allocations can really fix this, although the situation has improved following queue adjustments. Given the planned machine move and periods with lower machine availability, some careful consideration should be given to queue structures (etc.) to minimize the crush in the second half of 2015. 2. Some (more) careful expectations management may be needed for Cori/KL. For many applications it might be a lot of work to get performance not significantly better than a similar era Xeon. (Energy usage should be much better, but the allocations are in hours, not joules.) "

" The queue time last year for hopper is quite long, compared to the years before 2013, as far as I remember. This seems odd as more computational resources such as Edison are also available. Maybe some of the queue policies can be further improved. "

" Small to medium jobs are sometimes on the queue for a few consecutive days - and this is extremely inconvenient! "

" Please provide more capabilities for high-throughput jobs. It is very difficult with many users competing for time in a single high-throughput queue (thruput on hopper), especially when small projects need to compete with larger collaborations, which already have their own dedicated resources. I would hope that if NERSC provides dedicated resources to these large collaborations, then the other computing environments would be accessible to smaller projects. Currently, this is not the case for the thruput queue, the only high throughput queue available at NERSC "

" Something needs to be done about the queue wait times on Hopper and Edison. I often have to wait 1 to 2 weeks for a job to start requesting 1000 - 5000 processors. "

" Decrease wait times for short to medium jobs on hopper. "

" Reduce the batch queue wait times on Hopper and Edison, perhaps by altering the priorities or lowering the limits on the run times of jobs (e.g., 12 hrs for jobs with more than 512 cores, etc). Every code should be able to checkpoint. Also, use a 'fair share' system so that users lose priority as they run more jobs. "

" The queue time is very important for me. Now it will take me several days to finish one job on Hopper, compared with the very short waiting time (less than 1 day) in early 2014. "

" Currently, the batch wait times on both Hopper and Edison are terrible. I wish this could be improved going forward in future. "

" I have had an extremely positive experience with NERSC. Edison and hopper have been indispensable for my research over the past 2 years. NERSC's consultants have been extremely helpful and responsive. "

" Excellent programming environment, excellent hardware, good queueing policies on hopper; the higi-priority debug queue is extremely useful in particular (not all supercomputing centers have them, regrettably) "

" I have been very impressed with the stability of the computing systems I have used (Hopper and Edison). Any issues I had were resolved very quickly and thoroughly. Planned outages of systems were communicated well in advance of when they would occur, allowing me to schedule my tasks appropriately. "

" It is very easy to install and run the simulation I need to. Considering I do not have no experience using super computer, it took just few hours to run my case in Hopper. "

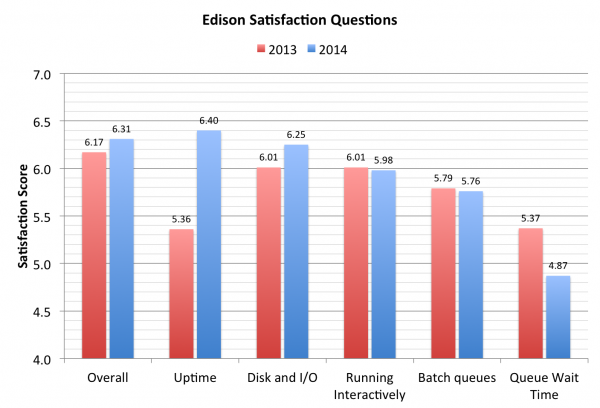

Edison

Edison was in preproduction in 2013. Users were not charged for usage, but the system was subject to frequent and unannounced downtimes. Users rated Edison overall higher than any of the individual Edison categories, likely reflecting overall satisfaction with the system despite unhappiness with frequent outages for testing and configuration. During this time, NERSC also experimented with a fair-share batch scheduler, which many users told NERSC consultants they disliked.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| Edison Overall | 229 | 6.17 | 1.18 | N/A |

| Uptime | 223 | 5.36 | 1.53 | N/A |

| Disk Configuration and I/O | 202 | 6.01 | 1.33 | N/A |

| Ability to run interactively | 147 | 6.01 | 1.20 | N/A |

| Batch queue structure | 212 | 5.79 | 1.46 | N/A |

| Batch queue wait times | 223 | 5.36 | 1.63 | N/A |

| Total for 6 Questions | | 5.77 | 1.40 | |

Representative User Comments

"I just wanted to say that I'm really happy with the shift from Hopper to Edison. My code is MPI only and the limited memory/core on Hopper made it really difficult to run the problem sizes I needed to run. It's very difficult to commit the necessary 6 months required to rewrite one's code to target a single fleeting architecture choice. With Edison's increased memory/core, I'm now able to make forward progress on scientific problems for which I'd been fighting with this limitation on Hopper."

"I am not fond at all of the 'fairshare' variable in the batch scheduler on Edison."

"I have mixed feelings about the new 'fair share' policy on Edison."

"Notifications about downtime. Edison uptime in November + December was abysmal. it's very hard to run long-term jobs when uptime is limited to two days at most. Plus the downtimes were far in excess of the planned windows. I can't complain since Edison was free and in 'beta', but it did make work difficult, especially since Hopper ended up being oversubscribed."

NOTE: The fair share component to the scheduler is not being used in production on Edison in 2014.

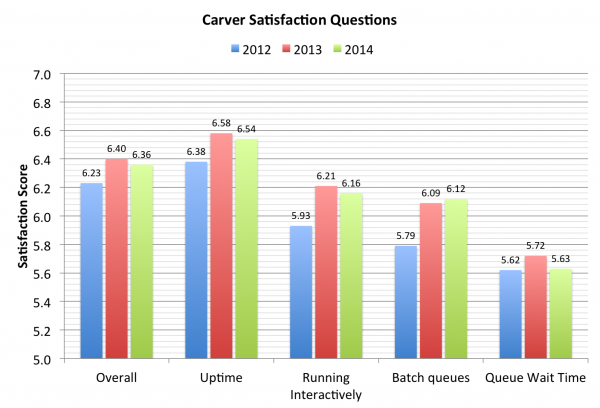

Carver

All satisfaction scores were up for Carver as it continued to be a reliable resource for users who prefer a standard Linux computing environment.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| Carver Overall | 184 | 6.40 | 0.92 | +0.20 |

| Uptime | 178 | 6.58 | 0.81 | - |

| Ability to run interactively | 141 | 6.21 | 1.13 | +0.28 |

| Batch queue structure | 163 | 6.09 | 1.26 | +0.30 |

| Batch queue wait times | 167 | 5.72 | 1.44 | - |

| Total for 5 Questions | | 6.21 | 1.10 | |

Representative user comments:

"NERSC needs to continue to expand its offerings for data-intensive high-throughput computing. Carver is currently the best NERSC general purpose resource for this and I am concerned that it is going away, leaving only more targeted offerings like PDSF, Genepool, and non-data-intensive HPC offerings like Edison and Hopper."

"I can access my data from carver even if hopper or edison is down."

"There are a few routines only available on Carver that I need, but my simulations are all ran on Hopper and Edison. Global scratch makes it easy to access my simulation results from Carver."

"Data analysis is convenient on Carver."

"My only experience is with Carver and it has been all positive. Jobs enter the queue quickly, maintenance is performed quickly and I can quickly move data to, from and around the environment."

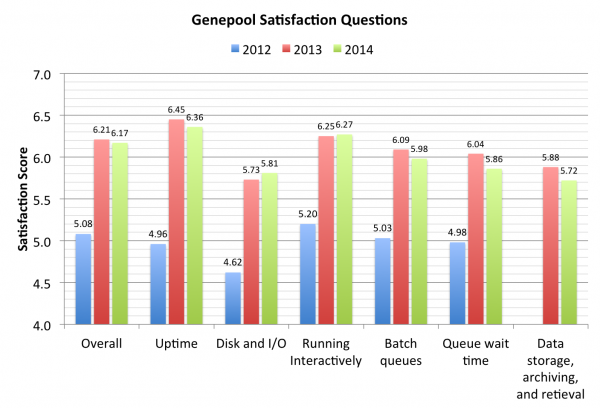

Genepool

Users of Genepool, a bioinformatics cluster run by NERSC for the Joint Genome Institute, were far more satisfied in 2013 than in 2012. All satisfaction ratings increased by more than one full point. The improvement was expressed by one user, who wrote "I would like to thank the NERSC team for stabilizing both Genepool and our storage to a level that we can spend time thinking about Science rather than the infrastructure."

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| Genepool Overall | 63 | 6.21 | 1.05 | +1.05 |

| Uptime | 62 | 6.45 | 0.69 | +1.49 |

| Ability to run interactively | 60 | 6.25 | 1.05 | +1.05 |

| Batch queue structure | 55 | 6.09 | 1.04 | +1.06 |

| Batch queue wait times | 57 | 6.04 | 0.91 | +1.06 |

| Filesystem configuration and I/O performance | 60 | 5.73 | 1.39 | +1.11 |

| Data storage, archiving, and retrieval | 60 | 5.88 | 1.33 | N/A |

| Total for 7 Questions | | 6.10 | 1.02 | |

Representative user comments:

"Very happy with hardware configurations, plenty of compute resources (on genepool) Filesystem performance is excellent especially considering the size and complexity of the configuration."

"I would like to thank the NERSC team for stabilizing both Genepool and our storage to a level that we can spend time thinking about Science rather than the infrastructure. Specifically, Douglas Jacobsen and Kjiersten Fagnan have put in a lot of effort to maintain the open communication so that users can get more involved and informed. It is also obvious that the team can now more proactively manage the system. Thank you and keep up the excellent work!"

"There's a high degree of inconsistency on Genepool - depending on whether you ssh or qlogin, and depending on which node you are on, various commands like 'ulimit' give varying and unpredictable output. This makes it incredibly difficult to develop, test, and deploy software in a way that will assure it can run on any node. Furthermore, forking and spawning processes is impossible to do safely when UGE may nondeterministically kill anything that temporarily exceeds the ulimit, even if it uses a trivial amount of physical memory. As a result, it is difficult to utilize system parallelism - if you know a set of processes will complete successfully when run serially, but UGE may kill them if you try any forking or piping, then the serial version has to go into production pipelines no matter how bad the performance may be. Also, Genepool's file systems are extremely unreliable. Sometimes projectb may yield 500MB/s, and sometimes it may take 30 seconds to read a 1kb file. Sometimes 'ls', 'cd', or 'tab'-autocomplete may just hang indefinitely. These low points where it becomes unusable for anywhere from a few minutes to several hours are crippling. Whether this is caused by a problem at NERSC or the actions of users at JGI, it needs to be resolved."

"Overall metadata servers seem to be a bottleneck for the disk systems I frequently use (global homes and /projectb). In particular, global homes becomes almost unusable at least once per day. For example, vim open and closing can take minutes which make using the system extremely frustrating. The queue configuration on genepool has changed substantially over the past 18 months and while it is substantially improved in terms of utilization, coherency and usability it is still not possible to simply submit a job to the cluster and expect it to run on appropriate resources. Determining qsub parameters needed to get a job to run require detailed knowledge of the hardware and cluster configuration which ideally would not be the case. I would like to be able to submit a job and have it run."

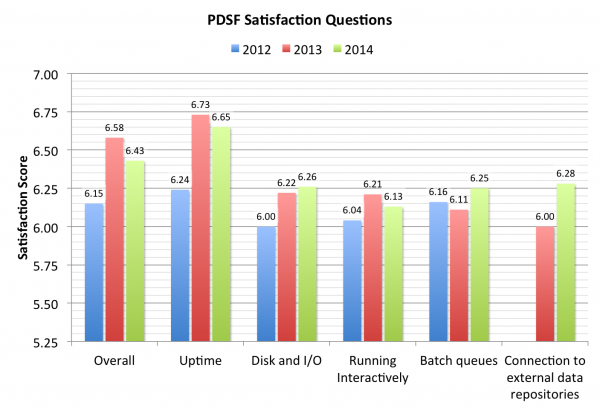

PDSF

Satisfaction scores for PDSF increased significantly in the areas of overall satisfaction and uptime. No areas showed a significant decrease.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| PDSF Overall | 33 | 6.58 | 0.66 | +0.43 |

| Uptime | 33 | 6.73 | 0.67 | +0.49 |

| Ability to run interactively | 32 | 6.22 | 1.24 | - |

| Batch queue structure | 35 | 6.11 | 1.51 | - |

| Filesystem configuration and I/O performance | 33 | 6.21 | 1.17 | - |

| Connection to external data repositories | 29 | 6.00 | 1.56 | N/A |

| Total for 6 Questions | | 6.46 | 0.82 | |

User Comments

I need more slots for jobs (nodes) on PDSF.

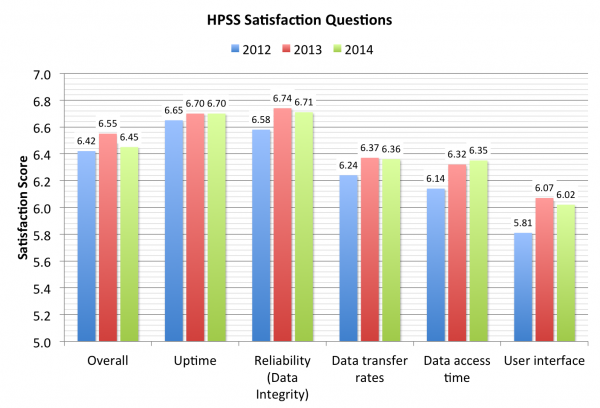

HPSS

Satisfaction scores for HPSS increased significantly in the areas of data reliability and user interface. No areas showed a significant decrease.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| HPSS Overall | 238 | 6.55 | 0.68 | - |

| Reliability (Data Integrity) | 227 | 6.74 | 0.59 | +0.16 |

| Availability (Uptime) | 231 | 6.70 | 0.56 | - |

| Data Transfer Rates | 230 | 6.37 | 0.96 | - |

| Data Access Time | 228 | 6.32 | 1.00 | - |

| User Interface | 221 | 6.07 | 1.13 | +0.26 |

| Total for 6 Questions | | 6.46 | 0.82 | |

Representative User Comments

"Currently HPSS is entirely local; any chance of remote backup services?"

HPSS SRU distribution across users should be controllable by project managers. It's annoying to track down individual users if their HPSS uploads suddenly saturated the entire repo's allowance. The project managers should be able to modify the % charged to each repo and even purge some data if it's taking up too much time.

"Archival HPSS is one of the things NERSC does well."

"HPSS is pretty good, though it still needs a programatic interface (e.g. python library) rather than just script interfaces."

"Back up and retrieval of data on the HPSS is fast."

"I was also impressed with the Globus Online interface to HPSS, tho originally I may have hit some bumps there."

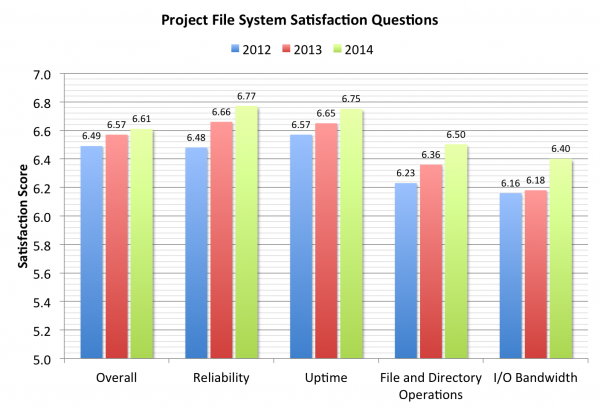

Project File System

Satisfaction scores for the Project file system improved significantly in system uptime. No areas showed a significant decrease.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| Project Overall | 216 | 6.57 | 0.68 | - |

| Reliability (Data Integrity) | 206 | 6.65 | 0.75 | - |

| Availability (Uptime) | 206 | 6.65 | 0.67 | +0.18 |

| File and Directory Operations | 196 | 6.36 | 0.98 | - |

| I/O Bandwidth | 202 | 6.18 | 1.08 | - |

| Total for 5 Questions | | 6.49 | 0.83 | |

User Comments

"Not sure if it's possible, but some sort of disk space explorer for large projectdirs would be great. e.g. I currently run "du" to figure out what directories are taking up a lot of space. Would be nice to have a pie chart showing which root directories on my projectdirs are taking up a lot space, then click that root dir to see which subdir is offending. There are visual tools to show the overall usage of scratch, projectdirs, home, etc as well as tools to find what users are taking up space. But I don't know of a nice disk space finder."

"We need the equivalent of /project optimized for installing collaboration code. Collaboration production code shouldn't live in some postdoc's home directory, and /project/projectdirs/ is optimized for large streaming files, not for installing code and managing modules."

"Could we please get a shorter path to /project/projectdirs/NAME ? Like /proj/NAME maybe or even /p/NAME?"

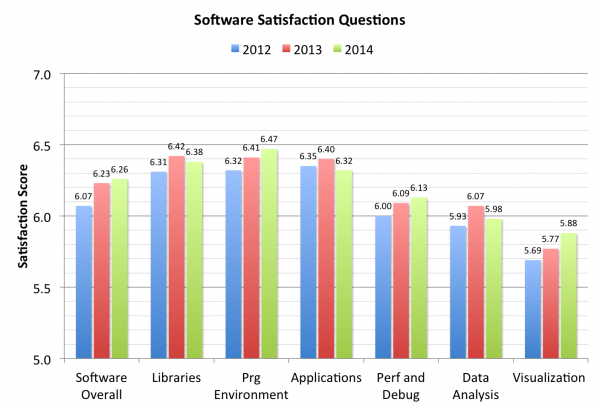

Software

Users rated NERSC's software offerings overall, and programming libraries in particular, more highly in 2013 than in 2012. Other software satisfaction scores were unchanged.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| Software Overall | 477 | 6.23 | 1.02 | +0.16 |

| Programming Libraries | 448 | 6.42 | 0.80 | +0.11 |

| Programming Environment | 465 | 6.41 | 0.89 | - |

| Applications Software | 425 | 6.40 | 0.82 | - |

| Performance and Debugging Tools | 322 | 6.09 | 1.08 | - |

| Data Analysis Software | 240 | 6.07 | 1.06 | - |

| Visualization Software | 221 | 5.77 | 1.28 | - |

| Total for 6 Questions | | 6.26 | 0.95 | |

Representative User Comments

"What does NERSC do well? Compile software that I need."

"The module system is very good, offers a lot of good and up-to-date software."

"NERSC is extremely well organized. I am never surprised about, e.g., new software, removed deprecated software, uptime or downtime, or anything else."

"I LOVE the broad choice of compilers, rapid response to issues, and excellent documentation. NERSC also does a great job with versioning of software executables and libraries."

"Provides excellent and reliable HPC platforms and relevant software."

"NERSC provides a broad spectrum of software applications to its users."

"Excellent array of scientific libraries and software."

"Support is AMAZING. Systems are rock solid, and software "just works."

"Regards system software upgrades: I understand the need to do this, but always dread it as often my code(s) may have problems. While consultants are always great at overcoming those types of problems, it takes time and I may end up missing compute times for "target dates".

"I hate the module system! It's a huge pain in the neck to use for someone not familiar with it."

"I need a programming environment that is easier to use and get the user's own software running."

"I would like to have detailed information on each software compilation on the nersc website e.g. lammps."

"Archived changes to software, even when "small" changes are made to the version of something, e.g., 2.1.2 to 2.1.3. Small changes that "shouldn't" have an effect sometimes do. When they are not documented, the user is left trying to figure out what they are doing wrong, when the change is what is causing the problem."

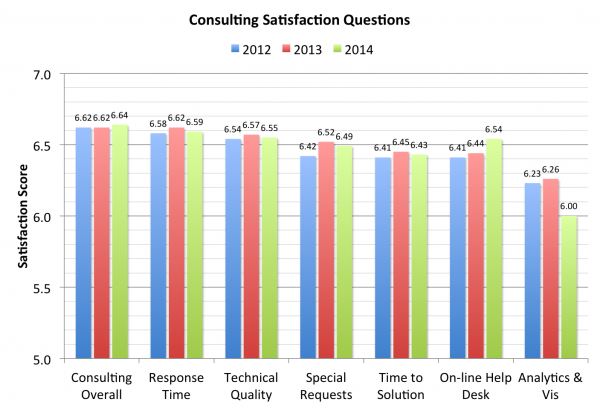

Consulting

Consulting satisfaction scores were high and unchanged from 2012.

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| Consulting Overall | 402 | 6.62 | 0.85 | - |

| Response Time | 402 | 6.62 | 0.83 | - |

| Quality of Technical Advice | 398 | 6.57 | 0.87 | - |

| Response to Special Requests | 278 | 6.52 | 1.02 | - |

| Time to Solution | 396 | 6.45 | 0.95 | - |

| On-line Help Desk | 227 | 6.44 | 1.05 | - |

| Data Analysis and Visualization | 68 | 6.26 | 1.30 | - |

| Total for 7 Questions | | 6.54 | 0.92 | |

Representative User Comments

The consultants are amazingly responsive. I am thoroughly impressed by response times and general helpfulness.

The NERSC facility should be the standard that all computing facilities strive for. The communication, consultation, and reliability are the attributes that come to my mind first.

Attention to our needs by the consultants is excellent. They do an excellent job of helping solve our technical issues as well as providing alternate solutions when our needs run into conflict with NERSC security and other policies.

Nersc consultants are the BEST! Timeliness and quality of help is just great.

So far I'm most impressed by the website (excellent beginner documentation) the friendly and helpful atitude of the consultants, and the frequent appearance of improvements.

Computer systems work well, help desk and consultants are amazing (fast and always useful).

I really appreciate the consultants. Being able to ask and get quick answers to questions is worth a lot.

Consultants and their help are AWESOME.

NERSC does very well in providing highly competent technical consulting, and they are very prompt.

My one small gripe is that it sometimes takes 3-4 hours for a new ticket to be assigned to the consultant. If the issue is urgent, this delay is problematic. I have tried contacting consultants with my questions directly, with rather mixed results, so now I either enter a ticket and brace myself for a long wait or do not bother with a ticket and attempt to get my answers through other means.

In the past we have asked about setting up a separate filesystem for software (see NERSC Consulting incident number 40343). This question has lingered unanswered for months.

Accounts and Allocations

| Topic | Number of Responses | Average Rating | Standard Deviation | Statistically Significant Changes 2012 to 2013 |

|---|---|---|---|---|

| Account Support and Passwords | 461 | 6.62 | 0.85 | - |

| NIM Web Accounting Interface | 451 | 6.43 | 0.83 | - |

| Allocations Process | 386 | 6.38 | 1.03 | - |

Comments

I want to complain about NIM. The new process of adding users to a repo has some annoying bugs. The website can be non-responsive and I don't know if it actually did anything. Numerous times I had to send along a companion email to consult just to make sure my approval of a new user to a repo went in. One user emailed me they had been given the username of 0 (the digit zero)! Put this as a quote on a slide to the DOE program managers as a quote from one of your users: "It is ridiculous for the DOE's flagship scientific computing center to be stuck with such a primitive user management system as NIM. This system needs a serious overhaul or better yet, a replacement."

Communications

Users told us that email and center status on the web were the communication methods most useful to them.

| Topic | Number of Responses | Average Usefulness Rating (1-3) | Standard Deviation |

|---|---|---|---|

| Email | 440 | 2.73 | 0.49 |

| Center Status on Web | 409 | 2.70 | 0.53 |

| MOTD | 409 | 2.54 | 0.62 |

Are you adequately informed about NERSC changes?

Yes: 354 (95.7%), No: 16 (4.3%)

Training

NERSC training satisfaction scores were up considerably from 2012.

| Topic | Number of Responses | Usefulness Rating (1-3) | Satisfaction Rating (1-7) | Standard Deviation | Statistically Significant Change 2012-2013 |

|---|---|---|---|---|---|

| Web Tutorials | 199 | 2.59 | 6.49 | 0.85 | +0.24 |

| New Users Guide | 271 | 2.76 | 6.49 | 0.89 | - |

| Training Presentations on Web | 151 | 2.41 | 6.33 | 0.91 | +0.48 |

| Classes | 131 | 2.27 | 6.33 | 0.87 | +0.33 |

| Video Tutorials | 84 | 2.13 | 6.21 | 1.10 | +0.47 |

Web

NERSC's users liked the increasing emphasis on system status information on the web and the ability to communicate with NERSC using mobile devices.

| Topic | Number of Responses | Satisfaction Rating (1-7) | Standard Deviation | Statistically Significant Change 2012-2013 |

|---|---|---|---|---|

| Web Site Overall (www.nersc.gov) | 439 | 6.49 | 0.71 | - |

| System Status Info | 368 | 6.58 | 0.91 | +0.15 |

| Accuracy of Information | 395 | 6.52 | 0.76 | - |

| MyNERSC (my.nersc.gov) | 341 | 6.49 | 0.89 | - |

| Timeliness of Information | 395 | 6.39 | 0.90 | - |

| Ease of Navigation | 415 | 6.30 | 0.93 | - |

| Searching | 293 | 6.03 | 1.21 | - |

| Ease of Use From Mobile Devices | 71 | 5.99 | 1.39 | +0.46 |

| Mobile Web Site | 61 | 5.95 | 1.51 | +0.57 |

| Total for 9 Questions | | 6.39 | 1.01 | |

Comments

Not sure if it's possible, but some sort of disk space explorer for large projectdirs would be great. e.g. I currently run "du" to figure out what directories are taking up a lot of space. Would be nice to have a pie chart showing which root directories on my projectdirs are taking up a lot space, then click that root dir to see which subdir is offending. There are visual tools to show the overall usage of scratch, projectdirs, home, etc as well as tools to find what users are taking up space. But I don't know of a nice disk space finder.

Better search

Live status should be updated MUCH more frequently.

I have found it very useful to have slides from AIT/HPC meetings for reference on the Genepool Training and Tutorials page. However it would be nice to also have a few take-home points from the meeting on the site.

Navigation around the web site is easy for places I know, but to find something new, that sometimes is difficult. It would help someone like me if there were an "index page", or "table of contents". I have a much easier time with that type of organization (eg, more words, alpha order, less image driven search).

Everything that is there is very useful to the user. The web page suits my needs PERFECTLY.

A page with a list of papers coming out of nersc computations would be very helpful.

My satisfaction level is extremely high, but: More technical information on designing parallel applications would be useful -- for instance, what kind of consistency guarantees do the file systems offer, or what kinds of interprocess communication are supported. Additionally, workflow management (eg. with Oozie) and web-based management of those workflows could be a useful feature -- SLAC has something similar with its pipeline infrastructure.

This is one of the most useful websites for getting started on a system. Thank you.

I would like to have more in-depth information of what is happening at NERSC available online. I can no longer participate in the NUGEX meetings and feel that I'm less informed than when I could actively participate. Maybe a transcript of the NUGEX conference calls would be useful.

The information that a person might need is almost always there. Sometimes finding that information can be tricky. For example, it seemed like I should have been able to find more quickly the format for acknowledging NERSC in manuscripts submitted for publication.

think "Queues and Scheduling Policies" page for clusters are most important, and then hope it could have a link at home page of clusters.

The software list needs to be updated somewhat. The list is comprehensive, but unfortunately some software requires custom execution environments (like the cluster compatibility modes) that should be better outlined and emphasized. Documentation for HSI and HTAR should be more extensive as well. But I am a power user, so I guess I look at the NERSC site as more of a reference than a tutorial.

Better searching.

It would be nice to have more useful system status information. Often the MOTD and website have not been updated.

Another complaint I have is timeliness in reporting about downtime. I understand that sometimes it may take a few minutes to realize that a system is down, but live status only allows a little bit of information out about what's going on. A little more openness from NERSC on its technical status would be great. How about a status blog we can be pointed to? I understand this can look bad for Cray (well tough for them) sometimes, but it really aggravates users that the updates on live status and MOTD are so terse. Let's have a kinder, gentler NERSC.

A cool thing would be a blog aggregating posts from various NERSC group leads and maybe some of the big users about what they're working on, what changes are coming to NERSC, etc. Might be more work but it could be distributed around...

If the "Queues and Policies" page for each system can be made more easily accessible on the NERSC web site, that would be helpful.

The website is extremely well put together.

I think the website is very well organized with lots of useful information.

So far I'm most impressed by the website (excellent beginner documentation), the friendly and helpful attitude of the consultants, and the frequent appearance of improvements.

A great website, great uptime--great notice of upcoming downtime.

Data Analytics and Visualization

| Topic | Number of Responses | Importance Rating (1-3) | Satisfaction Rating (1-7) | Standard Deviation | Statistically Significant Change 2012-2013 |

|---|---|---|---|---|---|

| Data Analytics and Visualization Assistance | 68 | 2.49 | 6.26 | 1.30 | - |

| Ability to perform data analysis | 141 | 2.76 | 6.26 | 1.05 | - |

| NERSC Databases | 58 | 2.47 | 6.21 | 1.06 | - |

| NERSC Science Gateways | 62 | 2.27 | 6.19 | 1.05 | - |

Where do you perform analysis and visualization of data produced at NERSC?

| All at NERSC | 45 | 9.4% |

| Most at NERSC | 85 | 17.7% |

| Half at NERSC | 94 | 19.6% |

| Most elsewhere | 118 | 24.6% |

| All elsewhere | 122 | 25.0% |

| I don't need data analysis or visualization | 18 | 3.8% |

Job Workflows

NERSC's users told us that it is important to them to be able to run both massively parallel jobs and ensembles of low- to medium-concurrency jobs.

Question: How important is it to you to be able to run the following types of jobs?

| Topic | Number of Responses | Average Importance Rating (1-3) | Standard Deviation |

|---|---|---|---|

| Massively parallel jobs | 418 | 2.67 | 0.56 |

| Ensembles of low to medium concurrency jobs | 380 | 2.57 | 0.66 |

| Interactive jobs | 368 | 2.20 | 0.80 |

| Serial jobs | 370 | 2.05 | 0.84 |

Data

Users' top data needs are I/O bandwidth and the amount of storage space available for live access. Close behind is long-term data retention. The least important is analytics and visualization assistance from NERSC.

How important are each of the following to you?

| Topic | Number of Responses | Average Importance Rating (1-3) | Standard Deviation |

|---|---|---|---|

| I/O bandwidth to local disk | 384 | 2.69 | 0.56 |

| Storage space for real-time data access | 381 | 2.69 | 0.56 |

| Long-term data retention | 349 | 2.53 | 0.67 |

| Ability to checkpoint jobs | 343 | 2.50 | 0.68 |

| Archival storage space | 370 | 2.46 | 0.68 |

| Metadata performance | 329 | 2.21 | 0.73 |

| Large memory nodes for data analysis and vis | 248 | 2.18 | 0.79 |

| Data management tools | 225 | 2.16 | 0.72 |

| Data access over the web | 293 | 2.14 | 0.82 |

| Database access | 204 | 2.10 | 0.83 |

| NERSC help with data management plan | 223 | 2.07 | 0.77 |

| Science gateways (see portal.nersc.gov) | 183 | 2.05 | 0.76 |

| Analytics and visualization assistance | 211 | 1.98 | 0.80 |

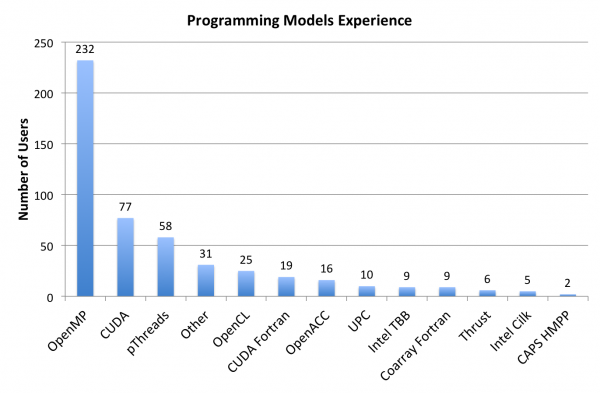

Programming Models

We asked users to let us know if they had experience with the programming models shown in the chart below. By far, users are most familiar with OpenMP. This will be important for programming on next-generation systems, where mixed MPI and OpenMP is expected to be the main programming model, with other languages and paradigms vying for acceptance.

Application Readiness

We asked users how ready their codes were for using GPU or Intel Phi processors. Their responses could be divided into the categories shown in the table below.

What are your plans for transitioning to a many-core architecture like GPUs or Intel Phi? How much of your code can use vector units or processors?

Number of users responding: 110

| Have not started to transition | 65 |

| Investigating GPUs | 33 |

| Using GPUs | 14 |

| Investigating Phi | 15 |

| Using Phi | 4 |

How can NERSC help you prepare for manycore architectures?

Number of respondents: 67

| Training, tutorials, documentation | 28 |

| Host test and prototype systems | 10 |

| Better profiling and optimization tools | 4 |

| Install optimized software | 3 |

| Provide coding manpower | 3 |

| Don't need help | 2 |

| Early announcement of future systems and programming models for them | 2 |

| Work with standards committees | 1 |

Comments

234 users responded to the following questions. Many responses fell into multiple categories, and can be broadly categorized as in the tables below. For the full text of all comments, see the "Full Comments" link in the navigation menu on the left side of this page.

What does NERSC do well?

| User support, good staff | 109 |

| Hardware, HPC resources | 107 |

| Well managed center, good in many ways, supports science | 58 |

| Uptime, reliability | 40 |

| Software support | 30 |

| Data, I/O, Networking | 24 |

| Batch structure, queue policies | 23 |

| Communication with users | 23 |

| Web, documentation | 21 |

| Security, ease of use, account management | 20 |

| Allocations | 14 |

| Training | 10 |

Does NERSC provide the full range of systems and services you need to meet your scientific goals? If not, what else do you need?

| Yes, or mostly yes | 125 |

| Data resources and services | 23 |

| Software requests | 18 |

| System feature requests | 17 |

| Queues and scheduling issues | 15 |

| Need for more cycles | 11 |

| Bad wait times | 9 |

| Need for testbeds | 5 |

| Need for training | 5 |

| Other requests | 4 |