Integrated Research Infrastructure

Integrated workflows are the future of scientific research, and DOE is investing in integrated research infrastructure (IRI): the seamless connection of world-class experimental research tools and user facilities with high-performance computing (HPC) facilities to empower researchers and radically accelerate scientific discovery.

Six keys to IRI

- Quality of service: Computation, storage, and networking capabilities enable fast response times and efficient utilization.
- Seamlessness: Tight integration of system components allows for high-performance workflows.
- Programmability and automation: APIs manage data, execute code, and interact with system resources to enable automation and integration.
- Orchestration: Resource management is coordinated across domains.
- Portability: Modular workflows can be executed across IRI sites.
- Security: Authentication, authorization, and auditing are essential components of a more connected ecosystem.

NERSC has long been at the forefront of IRI, laying the groundwork with the Superfacility model and offering experienced leadership for implementation. The three-year Superfacility Project (2019–2022) kick-started this work, building the base infrastructure and services, including API-based automation, federated identity, real-time computing support, and container-based edge services via Spin. NERSC now supports multiple science teams using automated pipelines to analyze data from remote facilities at large scale without routine human intervention.

NERSC is preparing for a future in which integrated workflows are the norm by building systems with these workflows in their DNA. NERSC-10, NERSC-11, and the surrounding data center infrastructure are designed to accelerate end-to-end DOE Office of Science workflows and enable new modes of scientific discovery through the integration of simulation, data analysis, and experiment.

Featured IRI science supported by NERSC

Advanced Light Source

The Advanced Light Source (ALS) at Berkeley Lab and other upgraded accelerators produce brighter X-ray beams than ever before. These beams enable new science and faster experiments, but they also generate massive amounts of data. To help process this flood of information, ALS and NERSC have collaborated to build powerful, intuitive computing tools. This integration of experimental science and HPC has transformed research in fields ranging from the deep earth to deep space.

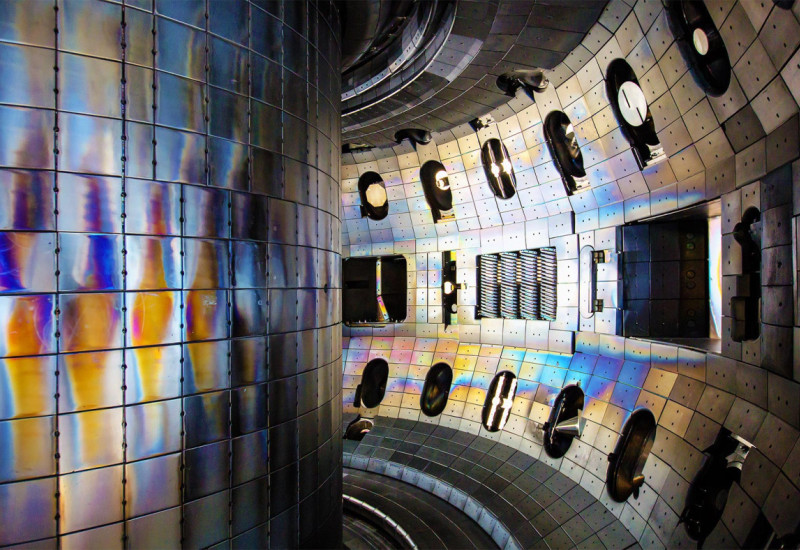

DIII-D National Fusion Facility

Widespread, emissions-free nuclear energy is the promise of nuclear fusion. A collaboration that combines HPC at NERSC, the high-speed ESnet data network, and the DIII-D National Fusion Facility's rich diagnostic suite makes data from fusion experiments more useful and more widely available, helping to accelerate the realization of fusion energy production.

Linac Coherent Light Source

The massive amounts of data collected at the Linac Coherent Light Source (LCLS) at SLAC National Accelerator Laboratory require more computing than onsite resources can provide. Automated workflows that send data to NERSC for analysis and return the results reduce analysis turnaround from days, weeks, or months to seconds, minutes, or hours.

Joint Genome Institute

Researchers in Berkeley Lab's Applied Mathematics and Computational Research Division (AMCR) and at the Joint Genome Institute (JGI), with the help of NERSC supercomputers and support from the ExaBiome project of the DOE Exascale Computing Project, have developed new tools that advance the field of metagenomics and expand scientists' understanding of our world's biodiversity.

LUX-ZEPLIN Experiment

Located deep under the Black Hills of South Dakota in the Sanford Underground Research Facility (SURF), the uniquely sensitive LUX-ZEPLIN (LZ) detector searches for dark matter, the mysterious form of matter that makes up most of the matter in our universe. LZ relies on NERSC for data movement, processing, and storage, handling about 1 PB of data per year. LZ also uses NERSC for simulation production, including custom workflow tools and science gateways that help collaborators organize their experiments and analyze and visualize notable detector events.