NERSC, Berkeley Lab Explore Frontiers of Deep Learning for Science

Computational Researchers Test Advanced Machine Learning Tools for HPC

December 8, 2015

By Kathy Kincade

Contact: cscomms@lbl.gov

Researchers in Berkeley Lab's Biological Systems and Engineering Division are using a deep learning library to analyze recordings of the human brain during speech production. Image: Kris Bouchard

Deep learning is not a new concept in academic circles or behind the scenes at “Big Data” companies like Google and Facebook, where algorithms for automated pattern recognition are a fundamental part of the infrastructure. But when it comes to applying these same tools to the extra-large scientific datasets that pass through the supercomputers at the National Energy Research Scientific Computing Center (NERSC) on a daily basis, it’s a different story.

Now a collaborative effort at Berkeley Lab is working to change this scenario by applying deep learning software tools developed for high performance computing environments to a number of “grand challenge” science problems running computations at NERSC and other supercomputing facilities.

“We are trying to assess if deep learning can be successfully applied to scientific datasets in climate studies, neutrino experiments, neuroscience and more,” said Prabhat, Data and Analytics Services Group Lead at Berkeley Lab. “Scientists routinely produce massive datasets with supercomputers and experimental and observational devices. The key challenge is how can we automatically mine this data for patterns, and deep learning does exactly that.”

Deep learning, a subset of machine learning, is the latest iteration of neural network approaches to machine learning problems. Machine learning algorithms enable a computer to analyze specific examples in a given dataset, find patterns in them and make predictions about what other patterns it might find.

Deep learning algorithms are designed to learn hierarchical, nonlinear combinations of input data. They get around the typical machine learning requirement of designing custom features and produce state-of-the-art performance for classification, regression and sequence prediction tasks. While the core concepts were developed over three decades ago, the availability of large datasets, coupled with datacenter hardware resources and recent algorithmic innovations, has enabled companies like Google, Baidu and Facebook to make advances in image search and speech recognition problems.
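To make "hierarchical, nonlinear combinations of input data" concrete, here is a minimal sketch of a two-layer network's forward pass in plain Python. It is an illustration only, not code from any of the projects described here: the function names, the tanh nonlinearity and the hand-picked weights are all assumptions, and a real network would learn its weights from data.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs, then tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the inputs through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

# Hypothetical weights chosen by hand for illustration.
layers = [
    ([[0.5, -0.3, 0.8], [-0.6, 0.9, 0.1]], [0.0, 0.1]),  # 3 inputs -> 2 hidden features
    ([[1.2, -0.7]], [0.05]),                              # 2 hidden features -> 1 output
]

output = forward([1.0, 2.0, 3.0], layers)
```

Each layer builds its features from the previous layer's outputs rather than from the raw inputs, which is the hierarchy; the tanh between layers is what keeps the stack from collapsing into a single linear map.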

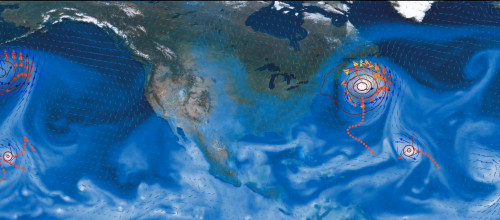

Deep learning tools are helping climate scientists better identify extreme weather events associated with climate change.

Surprisingly, deep learning has so far not made similar inroads in scientific data analysis, largely because the algorithms have not been designed to work on high-end supercomputing systems such as those found at NERSC.

“One of the challenges is to translate what we know in deep learning from speech, images and text to domains such as atmospheric simulations and astronomical sky surveys,” said Amir Khosrowshahi, chief technology officer and co-founder of Nervana, a startup providing deep learning as a cloud service. The company has been beta testing Neon, its open source deep learning library, at NERSC for the past year. “Domains where data can be mapped naturally to image or video analysis problems, as with atmospheric simulations, are often the easiest to approach with the latest deep learning algorithms.”

Three Case Studies

Take climate data analysis, for example. Modern climate simulations produce massive amounts of data, requiring sophisticated pattern recognition on terabyte- to petabyte-sized datasets to identify, say, extreme weather events associated with climate change. NERSC's Data and Analytics Services Group has been working with research groups at Berkeley Lab to test how Neon’s deep learning libraries might help streamline this process. And so far the results have been very positive.

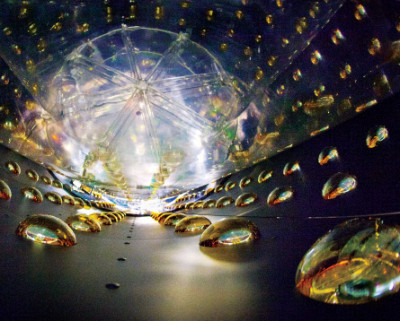

NERSC has implemented a deep learning data pipeline for the Daya Bay experiment, the first time unsupervised deep learning has been done in particle physics. Image: Roy Kaltschmidt

“We are finding that, in practice, we get state-of-the-art results using deep learning compared to other methods,” Prabhat said. “In climate simulation datasets, for example, we observe 95% accuracy in finding tropical cyclone patterns. We have had similar success with finding atmospheric rivers and weather fronts in satellite and reanalysis datasets.” Preliminary results from these investigations will be presented at the 2015 American Geophysical Union meeting, Dec. 14-18, in San Francisco.

The Daya Bay neutrino experiment is also testing how deep learning algorithms might enhance its data analysis efforts. Daya Bay is an international neutrino-oscillation experiment designed to determine the last unknown neutrino mixing angle θ13 using anti-neutrinos produced by the Daya Bay and Ling Ao Nuclear Power Plant reactors. Data collection began in 2011 and continues today.

“Like all particle physics, Daya Bay acquires a huge amount of data, and while it uses quite sophisticated analysis, it still requires a lot of hand tuning and physics knowledge,” said Wahid Bhimji, a data architect in NERSC’s Data and Analytics Services Group, who oversaw the implementation of a deep learning pipeline for Daya Bay at NERSC this past summer. “The idea here is to try and use deep learning to automatically reduce the features in the data. We have found that we can pick out the interesting physics without human involvement.” This is the first time unsupervised deep learning has been done in particle physics, he noted.

Kris Bouchard, a computational systems neuroscientist in Berkeley Lab’s Biological Systems and Engineering Division, has been working with NERSC, UC Berkeley and the University of California, San Francisco to apply a deep learning library called Theano to analyze recordings of the human brain during speech production.

“The fundamental problem we are trying to address is to decode or translate brain signals into the action being performed while the signal is recorded,” he said. “The traditional tools that have been applied for this purpose are standard machine learning approaches, which are likely ill-suited for the ‘deep’ structure of brain data.”

Deep learning gives them a more powerful and flexible method for recognizing patterns in brain signals, he added. “Using deep learning, our team has decoded produced speech syllables with 39% accuracy, which is 23 times greater than chance, 200% better than traditional algorithms on the same data and is the new state-of-the-art in the field,” Bouchard said. Results from this collaboration are being presented this week at the 2015 Neural Information Processing Systems Conference in Montreal.
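The figures in that quote can be unpacked with a little arithmetic. The sketch below rests on two assumptions that the article does not state: that "chance" means guessing uniformly among equally likely syllables, and that "200% better" means three times the accuracy of the traditional algorithms. Because the reported numbers are rounded, the results are rough estimates only.

```python
accuracy = 0.39      # reported decoding accuracy
times_chance = 23    # reported ratio of accuracy to chance

# Implied chance level, about 1.7%.
chance = accuracy / times_chance

# Assumption: uniform guessing, so chance = 1 / number_of_classes.
implied_classes = round(1 / chance)

# Assumption: "200% better" means accuracy = 3 x the traditional baseline.
implied_baseline = accuracy / 3.0

print(f"chance level: {chance:.1%}")
print(f"implied number of syllable classes: {implied_classes}")
print(f"implied traditional accuracy: {implied_baseline:.0%}")
```

Under those assumptions the quoted figures correspond to a task with several dozen possible syllables and a traditional baseline of about 13%.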

“Emerging deep learning and machine learning workloads have the potential to transform how scientists and enterprises gain the insights needed to solve some of their greatest challenges,” said Charles Wuischpard, vice president Data Center Group and general manager of the HPC Platform Group at Intel. “These workloads require high performance computing and big data manipulation techniques to accelerate research and remove the model training bottleneck. We’re excited to be working with researchers at Berkeley Lab and NERSC to enable a whole new level of deep neural network training on Intel® Xeon® processor and Intel Xeon Phi™ processor-based systems to enable truly iterative research. We look forward to the countless discoveries to come.”

“NERSC is taking a leadership role in exploring deep learning for science,” Prabhat said. “The MANTISSA project has produced state-of-the-art results in conventional object recognition tasks, as well as climate science, high-energy physics and neuroscience. Through collaborations with Nervana Systems, we have deployed software tools for the broader scientific community. And we are actively pursuing collaborations with Intel and UC Berkeley to deploy massively parallel, high performance implementations of deep learning libraries on the Cori platform. Deep learning has revolutionized commercial applications; we are very excited to bring such capabilities to simulation, experimental and observational datasets.”

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.