A Breakthrough in the Study of Laser/Plasma Interactions

A new PIC simulation tool brings advanced scalability to ultra-high-intensity physics simulations

April 22, 2019

By Kathy Kincade

contact: cscomms@lbl.gov

A new 3D particle-in-cell (PIC) simulation tool developed by researchers from Lawrence Berkeley National Laboratory and CEA Saclay is enabling cutting-edge simulations of laser/plasma coupling mechanisms that were previously out of reach of the standard PIC codes used in plasma research. A more detailed understanding of these mechanisms is critical to the development of ultra-compact particle accelerators and light sources that could solve long-standing challenges in medicine, industry, and fundamental science more efficiently and cost-effectively.

In laser-plasma experiments such as those at the Berkeley Lab Laser Accelerator (BELLA) Center and at CEA Saclay – an international research facility in France that is part of the French Atomic Energy Commission – very large electric fields within plasmas accelerate particle beams to high energies over much shorter distances than existing accelerator technologies can. The long-term goal of these laser-plasma accelerators (LPAs) is to one day build colliders for high-energy research, but many spin-offs are already being developed. For instance, LPAs can quickly deposit large amounts of energy into solid materials, creating dense plasmas and subjecting this matter to extreme temperatures and pressures. They also hold the potential for driving free-electron lasers that generate light pulses lasting just attoseconds. Such extremely short pulses could enable researchers to observe the interactions of molecules, atoms, and even subatomic particles on extremely short timescales.

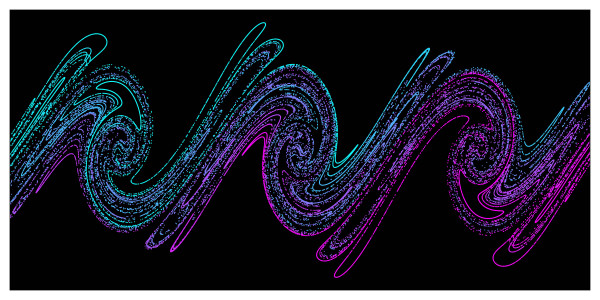

Large-scale simulations demonstrate that chaos is responsible for the stochastic heating of dense plasma by intense laser light. This snapshot from the PIC simulations shows the phase-space (position/momentum) distribution of electrons in the dense plasma, illustrating the so-called “stretching and folding” mechanism responsible for the emergence of chaos in physical systems. Image: G. Blaclard, CEA Saclay

Supercomputer simulations have become increasingly critical to this research, and Berkeley Lab’s National Energy Research Scientific Computing Center (NERSC) has become an important resource in this effort. By giving researchers access to physical observables such as particle orbits and radiated fields, which are difficult to measure experimentally at extremely small time and length scales, PIC simulations have played a major role in understanding, modeling, and guiding high-intensity physics experiments. But the lack of PIC codes accurate enough to model laser-matter interaction at ultra-high intensities has hindered the development of novel particle and light sources produced by this interaction.

This challenge led the Berkeley Lab/CEA Saclay team to develop their new simulation tool, dubbed Warp+PXR, an effort started during the first round of the NERSC Exascale Science Applications Program (NESAP). The code combines the widely used 3D PIC code Warp with the high-performance library PICSAR co-developed by Berkeley Lab and CEA Saclay. It also leverages a new type of massively parallel pseudo-spectral solver, also co-developed by the two laboratories, that dramatically improves the accuracy of the simulations compared to the finite-difference solvers typically used in plasma research.
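To make “pseudo-spectral” more concrete, the sketch below advances light in vacuum in one dimension with one common pseudo-spectral scheme, often called PSATD (pseudo-spectral analytical time domain). The fields are Fourier-transformed, advanced exactly in time for each wavenumber, and transformed back. It is an illustration under stated assumptions, not code from Warp, PICSAR, or Warp+PXR. It assumes normalized units (c = 1), a periodic grid, no plasma or currents, and a single field pair E_y, B_z. The function name `psatd_step` is hypothetical.

```python
import numpy as np


def psatd_step(ey, bz, dx, dt):
    """Advance 1D vacuum fields (E_y, B_z) by one step dt, in units where c = 1.

    Hypothetical illustration. In real space dE_y/dt = -dB_z/dx and
    dB_z/dt = -dE_y/dx. In Fourier space these become dE_k/dt = -i k B_k and
    dB_k/dt = -i k E_k, which can be integrated exactly over any dt.
    """
    n = ey.size
    k = 2.0 * np.pi * np.fft.rfftfreq(n, d=dx)  # non-negative angular wavenumbers
    ek, bk = np.fft.rfft(ey), np.fft.rfft(bz)   # fields are real, so use rfft
    cos_kdt, sin_kdt = np.cos(k * dt), np.sin(k * dt)
    ek_new = cos_kdt * ek - 1j * sin_kdt * bk   # exact rotation in time
    bk_new = cos_kdt * bk - 1j * sin_kdt * ek
    return np.fft.irfft(ek_new, n=n), np.fft.irfft(bk_new, n=n)


# Usage: a right-moving Gaussian pulse (E_y = B_z) in a periodic box.
n, length = 512, 100.0
dx = length / n
x = np.arange(n) * dx
ey = np.exp(-0.5 * ((x - 30.0) / 3.0) ** 2)
bz = ey.copy()
dt = 0.5  # about 2.6 cells per step: this exact-in-time vacuum update has no Courant limit
for _ in range(40):
    ey, bz = psatd_step(ey, bz, dx, dt)
expected = np.exp(-0.5 * ((x - 50.0) / 3.0) ** 2)  # pulse moved c*T = 40*0.5 = 20
print(np.max(np.abs(ey - expected)))  # expected to be near round-off level
```

Because each Fourier mode is advanced analytically, the only approximation in this vacuum case comes from how well the grid samples the pulse. A finite-difference scheme would also add a time-stepping error at every step.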

In fact, without this new, highly scalable solver, “the simulations we are now doing would not be possible,” said Jean-Luc Vay, a senior physicist at Berkeley Lab who heads the Accelerator Modeling Program in the Lab’s Applied Physics and Accelerator Technologies Division. “As our team showed in a previous study, this new FFT spectral solver enables much higher precision than can be done with finite difference time domain (FDTD) solvers, so we were able to reach some parameter spaces that would not have been accessible with standard FDTD solvers.” This new type of spectral solver is also at the heart of the next-generation PIC algorithm with adaptive mesh refinement that Vay and colleagues are developing in the new WarpX code as part of the U.S. Department of Energy’s Exascale Computing Project.
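The precision gap between FDTD and spectral solvers can be illustrated with the textbook numerical dispersion relation for light in vacuum. The sketch below assumes the simplest case, a 1D Yee-type FDTD scheme, for which sin(ωΔt/2)/(cΔt) = sin(kΔx/2)/Δx. It compares that scheme's phase velocity ω/k with the phase velocity of an exact-in-time spectral update, for which ω = ck at every resolved wavelength. Neither function is taken from Warp, PICSAR, or WarpX, and the names are hypothetical. Phase velocities below c are one known source of numerical artifacts in PIC codes, such as numerical Cherenkov radiation from relativistic particles.

```python
import numpy as np


def yee_phase_velocity(k_dx, courant):
    """Phase velocity v/c of light on a 1D Yee (FDTD) grid (hypothetical helper).

    k_dx: wavenumber times cell size, 0 < k_dx <= pi.
    courant: c*dt/dx, at most 1 for stability in 1D.
    Solves sin(w*dt/2) = courant * sin(k*dx/2) for w*dt.
    """
    w_dt = 2.0 * np.arcsin(courant * np.sin(np.asarray(k_dx) / 2.0))
    # v/c = w / (c k) = (w dt) / (c dt * k) = (w dt) / (courant * k dx)
    return w_dt / (courant * np.asarray(k_dx))


def spectral_phase_velocity(k_dx, courant):
    """Exact-in-time spectral update: w = c k, so v/c = 1 for every resolved k.

    courant is accepted only to mirror the FDTD signature; it has no effect.
    """
    return np.ones_like(np.asarray(k_dx, dtype=float))


# 32, 8 and 4 cells per wavelength (wavelength = 2*pi/k)
k_dx = np.array([np.pi / 16, np.pi / 4, np.pi / 2])
for courant in (0.5, 0.9):
    print(courant, yee_phase_velocity(k_dx, courant))  # all below 1, worst for short waves
print(spectral_phase_velocity(k_dx, 0.5))              # all exactly 1
```

In 1D the Yee scheme happens to be dispersion-free at exactly courant = 1. That special case does not carry over to 2D or 3D, where the stability limit is stricter and waves still lag behind c along most directions.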

2D and 3D Simulations Both Critical

Vay is also a co-author of a paper published March 21 in Physical Review X that reports on the first comprehensive study of laser-plasma coupling mechanisms using Warp+PXR. That study combined state-of-the-art experimental measurements conducted on the UHI100 laser facility at CEA Saclay with cutting-edge 2D and 3D simulations run on the Cori supercomputer at NERSC and the Mira and Theta systems at the Argonne Leadership Computing Facility at Argonne National Laboratory. These simulations enabled the team to better understand the coupling mechanisms between the ultra-intense laser light and the dense plasma it created, providing new insights into how to optimize ultra-compact particle and light sources. Benchmarks showed that Warp+PXR scales to as many as 400,000 cores on Cori and 800,000 cores on Mira and can reduce the time to solution by as much as three orders of magnitude on problems related to ultra-high-intensity physics experiments.

“We cannot consistently repeat or reproduce what happened in the experiment with 2D simulations – we need 3D for this,” said co-author Henri Vincenti, a scientist in the high-intensity physics group at CEA Saclay. Vincenti led the theoretical/simulation work for the new study and was a Marie Curie postdoctoral fellow at Berkeley Lab in Vay’s group, where he first started working on the new code and solver. “The 3D simulations were also really important to be able to benchmark the accuracy brought by the new code against experiments.”

For the experiment outlined in the Physical Review X paper, the CEA Saclay researchers focused a high-power (100 TW) femtosecond laser beam at CEA’s UHI100 facility onto a silica target to create a dense plasma. Two diagnostics, a Lanex scintillating screen and an extreme-ultraviolet spectrometer, were used to study the laser-plasma interaction during the experiment. Because these diagnostics cannot directly resolve the time and length scales of the interaction while it is happening, the simulations were again critical to the researchers’ findings.

“Often in this kind of experiment you cannot access the time and length scales involved, especially because in the experiments you have a very intense laser field on your target, so you can’t put any diagnostic close to the target,” said Fabien Quéré, a research scientist who leads the experimental program at CEA and is a co-author of the PRX paper. “In this sort of experiment we are looking at things emitted by the target that is far away – 10, 20 cm – and happening in real time, essentially, while the physics are on the micron or submicron scale and subfemtosecond scale in time. So we need the simulations to decipher what is going on in the experiment.”

“The first-principles simulations we used for this research gave us access to the complex dynamics of the laser field’s interaction with the solid target, at the level of detail of individual particle orbits, allowing us to better understand what was happening in the experiment,” Vincenti added.

These very large simulations with an ultrahigh-precision spectral FFT solver were possible thanks to a paradigm shift introduced in 2013 by Vay and collaborators. In a study published in the Journal of Computational Physics, they observed that, when solving the time-dependent Maxwell’s equations, the standard FFT parallelization method (which is global and requires communications between processors across the entire simulation domain) could be replaced with a domain decomposition with local FFTs and communications limited to neighboring processors. This is possible because electromagnetic fields propagate at a finite speed, the speed of light, so over one time step the fields in each subdomain depend, to a very good approximation, only on data from a thin layer of nearby cells. In addition to enabling much more favorable strong and weak scaling across a large number of computer nodes, the new method is also more energy efficient because it reduces communications.
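The domain-decomposition idea can be made concrete with a toy 1D version in vacuum and in normalized units (c = 1). Each hypothetical subdomain copies a few guard cells from its neighbors, takes a local FFT of its padded patch, advances the fields with an exact-in-time spectral update, and keeps only its interior cells. It then compares the result with a single global FFT over the whole grid. To keep the check exact, the sketch assumes cΔt = Δx, so each step moves the fields by exactly one cell and one guard cell per side would suffice. With other time steps the spectral update is not strictly local, and practical implementations typically use wider guard regions and accept a small, controllable error. The function names are illustrative only and are not the PICSAR or Warp+PXR API.

```python
import numpy as np


def spectral_advance(ey, bz, dx, dt):
    """Exact-in-time vacuum update of 1D (E_y, B_z), c = 1, treating the
    input arrays as one periodic patch (hypothetical helper)."""
    n = ey.size
    k = 2.0 * np.pi * np.fft.rfftfreq(n, d=dx)
    ek, bk = np.fft.rfft(ey), np.fft.rfft(bz)
    cos_kdt, sin_kdt = np.cos(k * dt), np.sin(k * dt)
    return (np.fft.irfft(cos_kdt * ek - 1j * sin_kdt * bk, n=n),
            np.fft.irfft(cos_kdt * bk - 1j * sin_kdt * ek, n=n))


def decomposed_step(ey, bz, dx, dt, n_domains, n_guard):
    """One step on a periodic grid split into n_domains patches.

    Each patch is padded with n_guard cells copied from its neighbors (the
    only data taken from other subdomains), advanced with its own local FFT,
    and trimmed back to the cells it owns.
    """
    n = ey.size
    bounds = np.linspace(0, n, n_domains + 1).astype(int)
    new_ey, new_bz = np.empty_like(ey), np.empty_like(bz)
    for lo, hi in zip(bounds[:-1], bounds[1:]):
        idx = np.arange(lo - n_guard, hi + n_guard)  # owned cells + guard cells
        e_loc = np.take(ey, idx, mode="wrap")        # periodic neighbor copy
        b_loc = np.take(bz, idx, mode="wrap")
        e_loc, b_loc = spectral_advance(e_loc, b_loc, dx, dt)
        new_ey[lo:hi] = e_loc[n_guard:n_guard + (hi - lo)]
        new_bz[lo:hi] = b_loc[n_guard:n_guard + (hi - lo)]
    return new_ey, new_bz


# Usage: compare 8 local-FFT subdomains with one global FFT.
n, dx = 256, 0.1
dt = dx                       # assumption: c*dt = dx, i.e. one cell per step
x = np.arange(n) * dx
ey = np.exp(-0.5 * ((x - 8.0) / 0.8) ** 2)
bz = 0.5 * ey                 # mix of right- and left-moving waves
ey_g, bz_g = ey.copy(), bz.copy()
ey_d, bz_d = ey.copy(), bz.copy()
for _ in range(50):
    ey_g, bz_g = spectral_advance(ey_g, bz_g, dx, dt)
    ey_d, bz_d = decomposed_step(ey_d, bz_d, dx, dt, n_domains=8, n_guard=2)
print(np.max(np.abs(ey_d - ey_g)))  # expected to be near round-off level
```

In this sketch the only data a subdomain reads from outside itself are the guard cells of its immediate neighbors. A single global FFT over the whole grid, by contrast, needs data from every subdomain. That difference is why the local approach reduces communication as the number of nodes grows.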

“With standard FFT algorithms you need to do communications across the entire machine,” Vay said. “But the new spectral FFT solver enables savings in both computer time and energy, which is a big deal for the new supercomputing architectures being introduced.”

Other members of the team involved in the latest study and co-authors of the new PRX paper include: Maxence Thévenet, a postdoctoral researcher whose input was also important in helping to explain the experiment’s findings; Guillaume Blaclard, a Berkeley Lab affiliate who is working on his Ph.D. at CEA Saclay and performed many of the simulations reported in this work; Prof. Guy Bonnaud, a CEA senior scientist whose input was important in understanding the simulation results; and CEA scientists Ludovic Chopineau, Adrien Leblanc, Adrien Denoeud, and Philippe Martin, who designed and performed the very challenging experiment on UHI100 under the supervision of Fabien Quéré.

NERSC is a DOE Office of Science User Facility. Additional support for this research was provided by the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program.

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.