A TOAST for Next Generation CMB Experiments

Berkeley Lab Cosmology Software Scales Up to 658,784 Knights Landing Cores

September 19, 2017

By Linda Vu

Contact: cscomms@lbl.gov

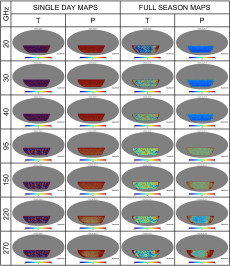

Cumulative daily maps of the sky temperature and polarization at each frequency, showing how the atmosphere and noise integrate down over time. The year-long campaign spanned 129 observation-days during which the ACTpol SS patch was available for a 13-hour constant-elevation scan. To make these maps, the signal, noise, and atmosphere observations were combined (including percent-level detector calibration error), filtered with a third-order polynomial, and binned into pixels. (Image Credit: Julian Borrill, Berkeley Lab)

The Cosmic Microwave Background (CMB) is the oldest light ever observed and is a wellspring of information about our cosmic past. This ancient light began its journey across space when the universe was just 380,000 years old. Today it fills the cosmos with microwaves. By parsing its subtle features with telescopes and supercomputers, cosmologists have gained insights into both the properties of our universe and fundamental physics.

Despite all that we’ve learned from the CMB so far, there is still much about the universe that remains a mystery. Next-generation experiments like CMB Stage-4 (CMB-S4) will probe this primordial light at even higher sensitivity to learn more about the evolution of space and time and the nature of matter. But before this can happen, scientists need to ensure that their data analysis infrastructure will be able to handle the information deluge.

That’s where researchers in Lawrence Berkeley National Laboratory’s (Berkeley Lab’s) Computational Cosmology Center (C3) come in. They recently achieved a critical milestone in preparation for upcoming CMB experiments: scaling their data simulation and reduction framework TOAST (Time Ordered Astrophysics Scalable Tools) to run on all 658,784 Intel Knights Landing (KNL) Xeon Phi processor cores on the National Energy Research Scientific Computing Center’s (NERSC’s) Cori system.

The team also extended TOAST’s capabilities to support ground-based telescope observations, including implementing a module to simulate the noise introduced by looking through the atmosphere, which must then be removed to get a clear picture of the CMB. All of these achievements were made possible with funding from Berkeley Lab’s Laboratory Directed Research and Development (LDRD) program.

“Over the next 10 years, the CMB community is expecting a 1,000-fold increase in the volume of data being gathered and analyzed—better than Moore’s Law scaling, even as we enter an era of energy-constrained computing,” says Julian Borrill, a cosmologist in Berkeley Lab’s Computational Research Division (CRD) and head of C3. “This means that we’ve got to sit at the bleeding edge of computing just to keep up with the data volume.”

TOAST: Balancing Scientific Accessibility and Performance

Cori Supercomputer at NERSC.

To ensure that they are making the most of the latest in computing technology, the C3 team worked closely with staff from NERSC, Intel and Cray to get their TOAST code to run on all of the Cori supercomputer’s 658,784 KNL cores. This collaboration is part of the NERSC Exascale Science Applications Program (NESAP), which helps science code teams adapt their software to take advantage of Cori’s manycore architecture and could be a stepping-stone to next-generation exascale supercomputers.

“In the CMB community, telescope properties differ greatly, and up until now each group typically had its own approach to processing data. To my knowledge, TOAST is the first attempt to create a tool that is useful for the entire CMB community,” says Ted Kisner, a Computer Systems Engineer in C3 and one of the lead TOAST developers.

“TOAST has a modular design that allows it to adapt to any detector or telescope quite easily,” says Rollin Thomas, a big data architect at NERSC who helped the team scale TOAST on Cori. “So instead of having a lot of different people independently re-inventing the wheel for each new experiment, thanks to C3 there is now a tool that the whole community can embrace.”

According to Kisner, the challenges to building a tool that can be used by the entire CMB community were both technical and sociological. Technically, the framework had to perform well at high concurrency on a variety of systems, including supercomputers, desktop workstations and laptops. It also had to be flexible enough to interface with different data formats and other software tools. Sociologically, parts of the framework that researchers interact with frequently had to be written in a high-level programming language that many scientists are familiar with.

The C3 team achieved a balance between computing performance and accessibility by creating a hybrid application. Parts of the framework are written in C and C++ to ensure that it can run efficiently on supercomputers, but it also includes a layer written in Python, so that researchers can easily manipulate the data and prototype new analysis algorithms.

“Python is a tremendously popular and important programming language; it’s easy to learn and scientists value how productive it makes them. For many scientists and graduate students, this is the only programming language they know,” says Thomas. “By making Python the interface to TOAST, the C3 team essentially opens up HPC resources and experiments to scientists who would otherwise be struggling with big data and not have access to supercomputers. It also helps scientists focus their customization efforts on the parts of the code where differences between experiments matter the most, and re-use lower-level algorithms common across all the experiments.”

To ensure that all of TOAST could effectively scale up to 658,784 KNL cores, Thomas and his colleagues at NERSC helped the team launch their software on Cori with Shifter, an open-source software package developed at NERSC to help supercomputer users easily and securely run software packaged as Linux containers. Linux container solutions like Shifter allow an application to be packaged with its entire software stack, including libraries, binaries and scripts, and to define other run-time parameters such as environment variables. This makes it easy for a user to run applications repeatedly and reliably, even at large scale.

“This collaboration is a great example of what NERSC’s NESAP for data program can do for science,” says Thomas. “By fostering collaborations between the C3 team and Intel engineers, we increased their productivity on KNL. Then, we got them to scale up to 658,784 KNL cores with Shifter. This is the biggest Shifter job done for science so far.”

With this recent hero run, the cosmologists also accomplished an important scientific milestone: simulating and mapping 50,000 detectors observing 20 percent of the sky at 7 frequencies for 1 year. That’s the scale of data expected to be collected by the Simons Observatory, which is an important stepping-stone to CMB-S4.
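The mapping step can be pictured with the recipe given in the opening figure caption: filter each scan with a third-order polynomial, then bin the samples into pixels. What follows is only a minimal, standard-library sketch of that idea under toy assumptions, not TOAST's implementation. The function names (`polyfit_lstsq`, `poly_filter`, `bin_map`), the 20-pixel sky, the drift model and the noise level are all hypothetical.

```python
import math
import random


def polyfit_lstsq(x, y, order):
    """Least-squares polynomial fit via the normal equations (pure Python)."""
    n = order + 1
    # Normal equations A c = b for the coefficients c[0..order].
    A = [[sum(xi ** (i + j) for xi in x) for j in range(n)] for i in range(n)]
    b = [sum((xi ** i) * yi for xi, yi in zip(x, y)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            f = A[row][col] / A[col][col]
            for k in range(col, n):
                A[row][k] -= f * A[col][k]
            b[row] -= f * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        s = sum(A[i][k] * coeffs[k] for k in range(i + 1, n))
        coeffs[i] = (b[i] - s) / A[i][i]
    return coeffs


def poly_filter(samples, order=3):
    """Subtract the best-fit polynomial of the given order from one scan."""
    n = len(samples)
    # Map the sample index onto [-1, 1] to keep the fit well conditioned.
    x = [2.0 * i / (n - 1) - 1.0 for i in range(n)]
    c = polyfit_lstsq(x, samples, order)
    return [s - sum(ck * xi ** k for k, ck in enumerate(c))
            for xi, s in zip(x, samples)]


def bin_map(pixels, samples, npix):
    """Average the samples landing in each pixel; unobserved pixels are None."""
    total = [0.0] * npix
    hits = [0] * npix
    for p, s in zip(pixels, samples):
        total[p] += s
        hits[p] += 1
    return [t / h if h else None for t, h in zip(total, hits)]


# Toy demonstration (all numbers are made up for illustration).
random.seed(0)
npix, nsamp = 20, 200
sky = [math.sin(2 * math.pi * p / npix) for p in range(npix)]
tod, pix = [], []
for scan in range(50):
    pointing = [(i * npix) // nsamp for i in range(nsamp)]  # one sweep of the patch
    a, b = random.gauss(0, 5), random.gauss(0, 5)           # slow drift per scan
    raw = [sky[p] + a + b * (i / nsamp) ** 2 + random.gauss(0, 0.5)
           for i, p in enumerate(pointing)]
    tod.extend(poly_filter(raw, order=3))
    pix.extend(pointing)
sky_map = bin_map(pix, tod, npix)
```

Note that a polynomial filter of this kind removes slow drifts but also suppresses large-scale sky signal along the scan, so the binned map is a filtered view of the sky rather than an unbiased one.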

"Collaboration with NERSC is essential for Intel Python engineers – this is unique opportunity for us to scale Python and other tools to hundreds thousands of cores," says Sergey Maidanov, Software Engineering Manager at Intel. "TOAST was among a few applications where multiple tools helped to identify and address performance scaling bottlenecks, from Intel® MKL and Intel® VTune™ Amplifier to Intel® Trace Analyzer and Collector and other tools. Such a collaboration helps us to set the direction for our tools development."

Accounting for the Atmosphere

The telescope's view through one realization of turbulent, wind-blown, atmospheric water vapor. The volume of atmosphere being simulated depended on (a) the scan width and duration and (b) the wind speed and direction, both of which changed every 20 minutes. The entire observation used about 5,000 such realizations. (Image Credit: Julian Borrill)

The C3 team originally deployed TOAST at NERSC nearly a decade ago, primarily to support data analysis for Planck, a space-based mission that observed the sky for four years with 72 detectors. By contrast, CMB-S4 will scan the sky with a suite of ground-based telescopes, fielding a total of around 500,000 detectors for about five years beginning in the mid-2020s.

In preparation for these ground-based observations, the C3 team recently added an atmospheric simulation module that naturally generates correlated atmospheric noise for all detectors, even detectors on different telescopes in the same location. This approach allows researchers to test new analysis algorithms on much more realistic simulated data.

“As each detector observes the microwave sky through the atmosphere it captures a lot of thermal radiation from water vapor, producing extremely correlated noise fluctuations between the detectors,” says Reijo Keskitalo, a C3 computer systems engineer who led the atmospheric simulation model development.

Keskitalo notes that previous efforts by the CMB community typically simulated the correlated atmospheric noise for each detector separately. The problem with this approach is that it can’t scale to the huge numbers of detectors expected for experiments like CMB-S4. But by simulating the common atmosphere observed by all the detectors once, the novel C3 method ensures that the simulations are both tractable and realistic.

“For satellite experiments like Planck, the atmosphere isn’t an issue. But when you are observing the CMB with ground-based telescopes, the atmospheric noise problem is malignant because it doesn’t average out with more detectors. Ultimately, we needed a tool that would simulate something that looks like the atmosphere because you don’t get a realistic idea of experiment performance without it,” says Keskitalo.
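A toy calculation illustrates both points: a single shared atmosphere produces strongly correlated detector signals, and that common component does not shrink when detectors are averaged. The sketch below is a deliberately simplified, one-dimensional stand-in and not the C3 team's atmospheric module. It generates one smoothed random "screen", lets every detector sample it at a slightly different offset, and adds independent white noise per detector. The names (`make_screen`, `observe`, `pearson`), the smoothing length, the detector offsets and the noise level are all assumptions chosen for illustration.

```python
import math
import random
import statistics

random.seed(1)


def make_screen(length, smooth=200):
    """One realization of a toy 1D atmosphere: white noise under a running sum.

    Dividing the running sum by sqrt(smooth) gives a unit-variance,
    slowly varying signal.
    """
    white = [random.gauss(0.0, 1.0) for _ in range(length + smooth)]
    window = sum(white[:smooth])
    screen = []
    for i in range(length):
        screen.append(window / math.sqrt(smooth))
        window += white[i + smooth] - white[i]
    return screen


def observe(screen, offset, nsamp, noise_sigma):
    """Detector time stream: the shared screen at this offset plus its own noise."""
    return [screen[offset + t] + random.gauss(0.0, noise_sigma)
            for t in range(nsamp)]


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


nsamp, ndet, noise_sigma = 10_000, 32, 0.5

# The atmosphere is simulated once and shared by every detector.
screen = make_screen(nsamp + ndet)
streams = [observe(screen, det, nsamp, noise_sigma) for det in range(ndet)]

# Average all detectors sample by sample.
coadd = [statistics.fmean(col) for col in zip(*streams)]

# What the co-added scatter would be if only independent noise were present.
white_only = noise_sigma / math.sqrt(ndet)

print(f"correlation between two detectors: {pearson(streams[0], streams[1]):.2f}")
print(f"scatter of one detector:           {statistics.pstdev(streams[0]):.2f}")
print(f"scatter after averaging {ndet}:        {statistics.pstdev(coadd):.2f}")
print(f"white-noise-only expectation:      {white_only:.2f}")
```

In this toy setup the independent noise averages down roughly as one over the square root of the number of detectors, while the shared atmospheric term stays close to its single-detector level, which is why it has to be modeled and removed rather than averaged away.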

“The ability to simulate and reduce the extraordinary data volume with sufficient precision and speed will be absolutely critical to achieving CMB-S4’s science goals,” says Borrill.

In the short term, tens of realizations are needed to develop the mission concept, he adds. In the medium term, hundreds of realizations are required for detailed mission design and the validation and verification of the analysis pipelines. Long term, tens of thousands of realizations will be vital for the Monte Carlo methods used to obtain the final science results.
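One way to see why the required number of realizations grows so steeply is the general statistics of Monte Carlo estimates, whose precision improves only as roughly the inverse square root of the number of realizations. The short sketch below is an illustration under the assumption of Gaussian-distributed quantities, not a statement of CMB-S4's actual requirements. The function name `fractional_error_of_variance` is hypothetical, and the realization counts are simply representative of "tens", "hundreds" and "tens of thousands".

```python
import math


def fractional_error_of_variance(n_realizations):
    """Relative 1-sigma scatter of a sample variance from N Gaussian draws.

    For Gaussian data the sample variance has relative scatter
    sqrt(2 / (N - 1)).
    """
    return math.sqrt(2.0 / (n_realizations - 1))


for n in (10, 100, 10_000):
    pct = 100 * fractional_error_of_variance(n)
    print(f"{n:>6} realizations -> about {pct:.1f}% uncertainty on a variance")
```

Under these assumptions, ten realizations pin down a variance to about 47 percent, a hundred to about 14 percent, and ten thousand to about 1.4 percent.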

“CMB-S4 will be a large, distributed collaboration involving at least 4 DOE labs. We will continue to use NERSC – which has supported the CMB community for 20 years now – and, given our requirements, likely need the Argonne Leadership Computing Facility (ALCF) systems too. There will inevitably be several generations of HPC architecture over the lifetime of this effort, and our recent work is a stepping stone that allows us to take full advantage of the Xeon Phi based systems currently being deployed at NERSC,” says Borrill.

The work was funded through Berkeley Lab’s LDRD program designed to seed innovative science and new research directions. NERSC and ALCF are both DOE Office of Science User Facilities.

The Office of Science of the U.S. Department of Energy supports Berkeley Lab. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.


About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.