Increase in IO Bandwidth to Enhance Future Understanding of Climate Change

August 6, 2009

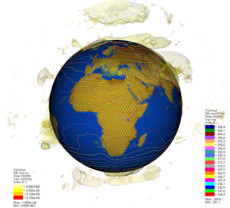

The large data sets generated by the GCRM require new analysis and visualization tools capable of parallel processing and rendering. This 3D plot of vorticity isosurfaces was produced with VisIt, a general-purpose 3D visualization tool with a parallel distributed architecture, which is being extended to support the geodesic grid used by the GCRM. This work was performed in collaboration with Prabhat at NERSC.

Results: Researchers at Pacific Northwest National Laboratory (PNNL)—in collaboration with the National Energy Research Scientific Computing Center (NERSC) located at the Lawrence Berkeley National Laboratory, Argonne National Laboratory, and Cray—recently achieved an effective aggregate IO bandwidth of 5 Gigabytes/sec for writing output from a global atmospheric model to shared files on DOE's "Franklin," a 39,000-processor Cray XT4 supercomputer located at NERSC. The work is part of a Science Application Partnership funded under DOE's SciDAC program.

This is an important milestone for the high-performance Global Cloud Resolving Model (GCRM) code being developed at Colorado State University under a project led by Professor David Randall. This bandwidth is the minimum required to write data fast enough that IO does not add significant overhead to running the GCRM.

The scientific data was transported between computing centers on the high-performance Energy Sciences Network (ESnet), which connects thousands of researchers at DOE laboratories and universities across the country with their collaborators worldwide. ESnet comprises two networks: an IP network that carries day-to-day traffic such as e-mail and video conferencing, and a circuit-oriented Science Data Network (SDN) that hauls massive scientific datasets. ESnet's On-Demand Secure Circuits and Advance Reservation System (OSCARS) allows researchers to reserve bandwidth on the SDN.

Why it matters: Higher performance will allow researchers to run models at higher resolution, and therefore achieve higher accuracy, and will also enable simulations representing longer periods of time. Both are crucial to understanding future climate change. These high IO rates were achieved while still writing to shared files using a data format that is common in the climate modeling community. This will enable many other researchers to make use of this data.

Methods: The increased bandwidth was achieved by consolidating IO on an optimal number of processors, aggregating writes into large chunks of data, and making additional improvements in the file system and parallel IO libraries.
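The first two ideas in the Methods paragraph can be illustrated with a small, self-contained sketch. It is not the team's code: the names `Write`, `aggregator_for`, `coalesce`, and `plan_io` are hypothetical, the block sizes are deliberately tiny, and a real implementation would rely on a parallel IO library rather than plain Python. The sketch only shows how funneling the output of many compute ranks through a few IO aggregators lets small contiguous writes be merged into larger ones.

```python
from dataclasses import dataclass


@dataclass
class Write:
    offset: int   # byte offset in the shared file
    data: bytes   # payload produced by one compute rank


def aggregator_for(rank: int, n_ranks: int, n_aggregators: int) -> int:
    """Map a compute rank to the IO aggregator that writes on its behalf."""
    ranks_per_agg = -(-n_ranks // n_aggregators)  # ceiling division
    return rank // ranks_per_agg


def coalesce(writes: list[Write]) -> list[Write]:
    """Merge writes that are contiguous in the file into larger chunks."""
    merged: list[Write] = []
    for w in sorted(writes, key=lambda w: w.offset):
        if merged and merged[-1].offset + len(merged[-1].data) == w.offset:
            # This write starts where the previous chunk ends: extend it.
            merged[-1] = Write(merged[-1].offset, merged[-1].data + w.data)
        else:
            merged.append(w)
    return merged


def plan_io(per_rank: list[Write], n_aggregators: int) -> dict[int, list[Write]]:
    """Group one write per rank by aggregator, then coalesce each group."""
    groups: dict[int, list[Write]] = {}
    for rank, w in enumerate(per_rank):
        agg = aggregator_for(rank, len(per_rank), n_aggregators)
        groups.setdefault(agg, []).append(w)
    return {agg: coalesce(ws) for agg, ws in groups.items()}


if __name__ == "__main__":
    block = 4  # bytes written per rank (assumed, tiny for illustration)
    per_rank = [Write(r * block, bytes([r]) * block) for r in range(8)]
    for agg, ws in plan_io(per_rank, n_aggregators=2).items():
        print(agg, [(w.offset, len(w.data)) for w in ws])
```

With eight ranks and two aggregators, each aggregator ends up issuing a single 16-byte write instead of four 4-byte writes. The number of aggregators is the tuning knob the paragraph refers to as "an optimal number of processors"; the sketch makes no claim about what that number was on Franklin.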

What's next: To date, most of the team's IO tests have been run on the Franklin supercomputer and on the Chinook supercomputer at DOE's EMSL, a national scientific user facility at PNNL. The team plans to run benchmarks on the Jaguar supercomputer, which has a much higher theoretical bandwidth than either Franklin or Chinook. In addition, the team plans more detailed profiling of the IO to see whether the IO libraries can yield further bandwidth improvements.

Acknowledgment: The work was supported by the Department of Energy's SciDAC Program. The project, called Community Access to Global Cloud Resolving Model and Data, is led by Karen Schuchardt of PNNL. It is a Scientific Application Partnership (SAP) with the Global Cloud Resolving Model (GCRM) project, which is also supported by the SciDAC program and led by Professor David Randall of Colorado State University. SciDAC SAPs are dedicated to providing efficient, flexible access to logical subsets of this data as well as selected derived products and visualizations, thus enabling a range of analyses by the broader climate research community.

EMSL involvement: Much of the development and debugging of the IO API (application programming interface), as well as the initial benchmarking, was performed on EMSL's Chinook supercomputer.

Research team: The team includes PNNL's Karen Schuchardt, Annette Koontz, Bruce Palmer, Jeff Daily, and Todd Elsethagen; Prabhat, Mark Howison, Katie Antypas, and John Shalf from NERSC; Rob Latham and Rob Ross from Argonne National Laboratory; and David Knaak from Cray, Inc.

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.