NERSC’s QCD Library is a Model Resource

February 1, 2004

Five years ago, when the first libraries of lattice QCD data were established at NERSC, the idea of making such information easily accessible to researchers at institutions around the world was a bold new approach. Since then, “The NERSC Archive” format, as it is colloquially known, has become a standard lattice storage format, making the exchange of lattices between groups much more efficient. As a result, lattice QCD researchers can avoid the time-consuming and computationally intensive process of creating their own lattice data by drawing on the research of others.

Now, the concept is going global. With the appearance of Grid-based technologies, the lattice gauge theory community is developing a new protocol and tools for sharing lattice data across the Grid. The International Lattice Data Federation (http://www.lqcd.org/ildg/wiki/tiki-index.php) has been formed as part of the SciDAC initiative in the U.S. and similar large-scale computing projects in Europe, Japan, and Australia. Sometime this year, the original lattice archive at NERSC will join similar mirrors around the world, allowing access via Web services. Researchers will be able to query the archive database, examine the resource catalog of all available lattices in the world, and then seamlessly transfer the data to their computing resources distributed on the Grid.

Behind all this are hundreds of gigabytes of nothingness, all created by lattice gauge theorists, the particle physicists who model the behavior of quarks. The data, called “lattices,” are snapshots of the way quantum chromodynamic (QCD) fields, which mediate the forces between quarks, fluctuate in a vacuum. Each lattice is a four-dimensional array (28³ x 96, say) of four 3 x 3 complex matrices representing these fields in a tiny box of space measuring about 2 femtometers on a side (1 fm = 10⁻¹⁵ m) and extending about 10⁻²² seconds in time. Lattice gauge theorists generate many such lattices and then use these to compute quantum averages of various physical quantities under investigation.
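To get a sense of scale, the size of a single lattice can be estimated from the description above. The following is a rough sketch, not the archive's actual file layout: it takes a lattice with 28 sites in each spatial direction and 96 in time, and the function name and the choice of 4 or 8 bytes per real number are assumptions made for illustration. Real storage formats may add headers or store the matrices more compactly.

```python
def lattice_size_bytes(spatial_extent, time_extent, bytes_per_real=8):
    """Estimate the uncompressed size of one lattice, in bytes.

    bytes_per_real is an assumption: 4 for single precision, 8 for double.
    """
    sites = spatial_extent ** 3 * time_extent  # four-dimensional grid: L^3 x T
    matrices_per_site = 4                      # four 3 x 3 matrices at each site
    reals_per_matrix = 3 * 3 * 2               # 9 complex entries, real + imaginary parts
    return sites * matrices_per_site * reals_per_matrix * bytes_per_real


single = lattice_size_bytes(28, 96, bytes_per_real=4)
double = lattice_size_bytes(28, 96, bytes_per_real=8)
print(f"single precision: {single / 1e9:.2f} GB")  # 0.61 GB
print(f"double precision: {double / 1e9:.2f} GB")  # 1.21 GB
```

At roughly half a gigabyte to a gigabyte per lattice under these assumptions, an ensemble of a few hundred lattices already reaches the hundreds of gigabytes mentioned above.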

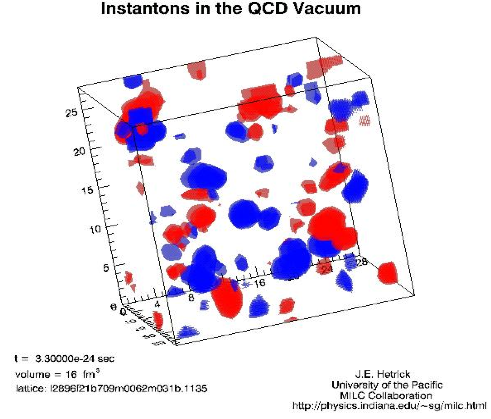

A visualization of a QCD lattice, with red and blue blobs representing instantons and anti-instantons.

Above is a visualization of one of these lattices, showing instantons, fluctuations of the gluon field that are topologically non-trivial and strongly affect the dynamics of the quarks. Red and blue blobs represent instantons and anti-instantons, which twist with opposite topology. In fact, it is believed that much of the mass of the neutron and proton is related to these excitations. The full four-dimensional visualization of these fluctuations can be viewed at http://cliodhna.cop.uop.edu/~het-rick/timeseq1135.mpg as an MPEG movie.

The most time-consuming part of lattice gauge theory is the production of these lattices, which must be done so that the fluctuations are truly representative of the underlying laws of quantum field theory, including, for example, the probability that quarks and anti-quarks pop out of the vacuum and then annihilate each other, producing more gluons. Running on a computer capable of performing several hundred gigaflop/s, this process typically takes from hours to days per lattice. But to complete a study of a particular quantity, several hundred to thousands of lattices must be generated. The bottom line? Creating enough lattices for meaningful research can require teraflop-years of computer time.
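The "teraflop-years" figure follows from simple arithmetic on the numbers in this paragraph. Below is a back-of-the-envelope sketch; the specific inputs (500 gigaflop/s sustained, one day per lattice, 1,000 lattices) are illustrative values picked from within the quoted ranges, not measurements from any particular project, and the function name is hypothetical.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365


def teraflop_years(sustained_gigaflops, days_per_lattice, num_lattices):
    """Total compute for an ensemble, in teraflop-years.

    One teraflop-year is 10^12 floating-point operations per second,
    sustained for one year.
    """
    total_flop = (sustained_gigaflops * 1e9
                  * days_per_lattice * SECONDS_PER_DAY
                  * num_lattices)
    one_teraflop_year = 1e12 * SECONDS_PER_DAY * DAYS_PER_YEAR
    return total_flop / one_teraflop_year


# Illustrative inputs only: 500 gigaflop/s, 1 day per lattice, 1,000 lattices.
cost = teraflop_years(sustained_gigaflops=500, days_per_lattice=1, num_lattices=1000)
print(f"{cost:.2f} teraflop-years")  # 1.37 teraflop-years
```

With these assumed inputs, a single ensemble costs more than a teraflop-year, which is why reusing lattices that already exist is so attractive.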

Once generated, however, these lattices can be used by many researchers who might each be interested in studying different phenomena. Measuring quantum averages on the ensemble can be done quickly on local clusters or even workstations. The obstacles to sharing lattices in the past included the size and location of the data. Typically, lattices are generated at a supercomputing center, and access is restricted to those with accounts at that center. Thus it is impossible for a researcher in France, say, to copy the files to their local account.

In 1998, Jim Hetrick at the University of the Pacific in California, Greg Kilcup at The Ohio State University, and NERSC’s mass storage group developed the first Web-based lattice repository, thereby giving other scientists access to lattice data stored on HPSS at NERSC. The lattice archive (http://qcd.nersc.gov) allows researchers around the world to browse and download lattices of interest. Hetrick and Kilcup maintain the archive and contribute lattices from their own research groups as well as contributions from others.

This archive was the first of its kind in the lattice gauge theory community and quickly became very popular. It has served terabytes of data to researchers around the world who otherwise would either not have been able to carry out their investigations or would first have had to spend many months generating their own lattice data.

For more information, contact Nancy Meyer at NLMeyer@lbl.gov.

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.