Perlmutter

The mural that appears on NERSC's primary high-performance computing system, Perlmutter, pays tribute to Saul Perlmutter and the team he led to the Nobel Prize-winning discovery of an accelerating universe. (Credits: Zarija Lukic [simulation], Andrew Myer [visualization], and Susan Brand [collage], Berkeley Lab)

Perlmutter is NERSC’s flagship system housed in the center’s facility in Shyh Wang Hall at Berkeley Lab. The system is named in honor of Saul Perlmutter, an astrophysicist at Berkeley Lab who shared the 2011 Nobel Prize in Physics for the ground-shaking discovery that the expansion of the universe is accelerating. The search to explain this acceleration revealed a universe dominated by enigmatic dark energy. Dr. Perlmutter has been a NERSC user for many years, and part of his Nobel Prize-winning work was carried out on NERSC systems.

Perlmutter is designed to meet the emerging simulation, data analytics, and artificial intelligence requirements of the scientific community.

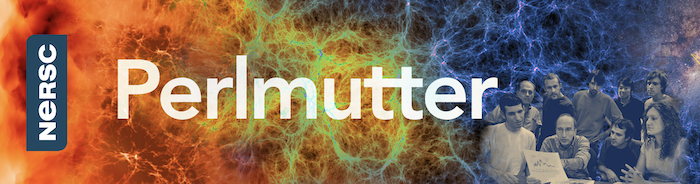

The HPE Cray Shasta system integrates leading-edge technologies, including NVIDIA A100 GPUs, AMD "Milan" EPYC CPUs, a novel HPE Slingshot high-speed network, and a 35-petabyte all-flash scratch file system. In total, it comprises 3,072 CPU-only nodes and 1,792 GPU-accelerated nodes. More details on the architecture are available on the Perlmutter Architecture documentation webpage.
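As a quick check on the node counts above, the two node types add up as shown below. The GPU total assumes four A100 GPUs per GPU-accelerated node, a figure taken from NERSC's published architecture description rather than from this page.

$$
N_{\text{nodes}} = 3{,}072 + 1{,}792 = 4{,}864, \qquad N_{\text{GPU}} = 1{,}792 \times 4 = 7{,}168 \ \text{A100 GPUs}
$$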

The programming environment features the NVIDIA HPC SDK (Software Development Kit) in addition to the familiar CCE, GNU, and AOCC (AMD Optimizing C/C++ and Fortran Compilers) suites, supporting diverse parallel programming models such as MPI, OpenMP, CUDA, and OpenACC for C, C++, and Fortran codes.

Innovations to Support the Diverse Needs of Science

Perlmutter includes a number of innovations designed to meet the diverse computational and data analysis needs of NERSC’s users and to speed their scientific productivity.

The system derives performance from advances in hardware and software, including a new Cray system interconnect, code-named Slingshot. Designed for data-centric computing, Slingshot’s Ethernet compatibility, advanced adaptive routing, first-of-a-kind congestion control, and sophisticated quality of service capabilities improve system utilization and performance, as well as scalability of supercomputing and AI applications and workflows.

The system also features NVIDIA A100 GPUs with Tensor Core technology, and it uses direct liquid cooling. Perlmutter is also NERSC's first supercomputer with an all-flash scratch file system. The 35-petabyte Lustre file system can move data at more than 5 terabytes per second, making it the fastest storage system of its kind.
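For a rough sense of scale, assume the full 35 petabytes were read at exactly 5 terabytes per second, using decimal units. Because the stated rate is a lower bound, the result is an upper bound on the time needed to sweep the entire scratch file system once:

$$
t = \frac{35 \times 10^{15}\ \text{B}}{5 \times 10^{12}\ \text{B/s}} = 7{,}000\ \text{s} \approx 1.9\ \text{h}
$$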

Two-Phase Installation

Perlmutter was installed in two phases beginning at the end of 2020. The first phase, consisting of 12 GPU-accelerated cabinets and 35 petabytes of all-flash storage, was ranked No. 5 on the Top500 list in November 2021. The second phase completed the system with an additional 12 cabinets of CPU-only nodes in 2022.

Preparation for Perlmutter

In preparation for Perlmutter, NERSC implemented a robust application readiness plan for simulation, data, and learning applications through the NERSC Exascale Science Applications Program (NESAP). Part of the effort yielded recommendations for transitioning applications to Perlmutter so that current users can get up to speed on using the unique features of the new system.

NERSC is the DOE Office of Science’s (SC’s) mission high-performance computing facility, supporting more than 10,000 scientists and 2,000 projects annually. The Perlmutter system represents SC’s ongoing commitment to extreme-scale science, developing new energy sources, improving energy efficiency, discovering new materials, and analyzing massive datasets from scientific experimental facilities.

Building Software and Running Applications

Several base compiler suites provided by HPE Cray are available on Perlmutter: HPE Cray, GNU, AOCC, and NVIDIA. They offer varying levels of support for GPU code generation, and all of them provide compilers for C, C++, and Fortran. Additionally, NERSC plans to provide the LLVM compilers on Perlmutter.

Timeline

- November 2020 - July 2021: Cabinets containing GPU compute nodes and service nodes for the Phase 1 system arrived on-site and were configured and tested.

- Summer 2021: When the Phase 1 system installation was completed, NERSC started to add users in several phases, starting with NESAP teams.

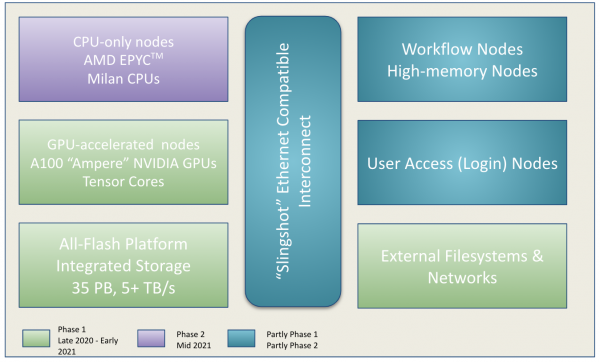

With their doors removed, Perlmutter's Phase 1 cabinets reveal the blue and red lines of the system's direct liquid cooling.

- June and November 2021: The Phase 1 system was ranked No. 5 in the Top500 lists in June and November 2021.

- June 2, 2021, and January 5-7, 2022: User training sessions were held to teach NERSC users how to build and run jobs on Perlmutter.

- January 19, 2022: With the start of allocation year 2022, the system was made available to all users who want to use GPUs.

- August 16, 2022: After the system delivered 4.2 million GPU node hours and 2 million CPU node hours to science applications during its free "early science" phase, NERSC announced that it would transition to charged service in September.

Related Links

Technical Documentation

More on Saul Perlmutter and NERSC

- NERSC Played Key Role in Nobel Laureate's Discovery

- NERSC Nobel Lecture Series: Saul Perlmutter Lecture, “Data, Computation, and the Fate of the Universe,” June 11, 2014

- Discovery of Dark Energy Ushered in a New Era in Computational Cosmology

- NERSC and the Fate of the Universe

- Computational Cosmology Comes of Age