‘Sidecars’ Pave the Way for Concurrent Analytics of Large-Scale Simulations

Halo Finder Enhancement Puts Supercomputer Users in the Driver’s Seat

November 2, 2015

Contact: Kathy Kincade, +1 510 495 2124, kkincade@lbl.gov

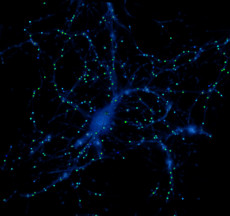

In this Reeber halo finder simulation, the blueish haze is a volume rendering of the density field that Nyx calculates every time step. The light blue and white parts have the highest density. The spheres are the locations of dark matter halos, as calculated by Reeber, that line up along those filaments almost perfectly, which means that Reeber is working correctly because the filaments are the regions with the highest density, which is where we expect to find halos. Image: Brian Friesen

A new software tool developed through a multi-disciplinary collaboration at Lawrence Berkeley National Laboratory (Berkeley Lab) allows researchers doing large-scale simulations at the National Energy Research Scientific Computing Center (NERSC) and other supercomputing facilities to do data analytics and visualizations of their simulations while the simulations are running.

The new capability will give scientists working in cosmology, astrophysics, subsurface flow, combustion research and a variety of other fields a more efficient way to manage and analyze the increasingly large datasets generated by their simulations. It will also help improve their scientific workflows without burning precious additional computing hours.

Visualization and analytics have long been critical components of large-scale scientific computing. The typical workflow involves running each simulation on a predetermined number of supercomputing cores, dumping data to disk for post-processing (including visualization and analytics) and repeating the entire process as needed until the experiment is complete. Often the final output takes up much less disk space than the simulation data needed to create it.

But as extreme-scale computing moves closer to reality, this approach is increasingly unfeasible due to I/O bandwidth constraints and limited disk capacity. As a result, data analysis requirements are outpacing the performance of parallel file systems and the current disk-based data management infrastructure will eventually limit scientific productivity.

One solution to this problem is in situ analysis, where the diagnostics run on the same cores as the simulation and much of the analysis is performed while the data are still in memory. While this approach can reduce I/O costs and allows the analysis routines to work on the data in the same place they were created, it also requires the simulation to wait until the analysis is completed. For some simulations this might be optimal, but if the number of cores needed for analysis is much less than the number needed for the simulation, this ends up being a waste of computational resources.

The challenge of finding the best way to use the available resources to complete both the simulation and the analysis as efficiently as possible prompted a team of mathematicians, cosmologists and data analytics and visualization experts in Berkeley Lab’s Computational Research Division (CRD) to investigate a different approach:in transit analytics. With in transit analytics, for any given simulation the majority of the allotted cores are dedicated to running the simulation, while a handful or more—dubbed “sidecars”—are reserved for doing diagnostics or other jobs that would traditionally be post-processing tasks. Using either in situ or in transit approaches. There’s no need to write the simulation data itself to disk; only the final output needs to be saved.

Cosmology’s Growing Data Challenges

The team is initially testing the in transit approach on Berkeley Lab’s Nyx code, coupled with a new topological halo finder —“Reeber”—developed by Gunther Weber and Dmitriy Morozov, computational researchers in the Lab’s Data Analysis and Visualization Group. Nyx, a large-scale cosmological simulation code developed by researchers in the Center for Computational Sciences and Engineering (CCSE) at Berkeley Lab, is one of the codes based on BoxLib. Because the managing of the sidecar approach is handled by the BoxLib framework rather than by Nyx, all other codes based on BoxLib will be able to take advantage of this same capability with relatively little additional effort. Nyx already had the ability to run the halo finder in situ, so the team will be able to do direct comparisons of the in situ and in transit approaches.

“In transit can be more complicated (than in situ) because you are allocating cores that are doing something different than the other cores, but they are all part of the same computation,” said Almgren, acting group leader of CCSE. “When you submit a job to run one program, it’s actually going to execute two different things: the computation on some of the cores and the analytics on the other cores.”

Cosmology is a good proving ground for in transit analytics because modeling the evolution of the universe over time tends to generate some of the largest datasets in science. A typical Nyx simulation involves hundreds of time steps, each just under 4 TB in size, which means the entire dataset for a single simulation could top out at 400 TB or more—a daunting challenge for both analysis and storage using current supercomputing technologies.

Halo finding has become an invaluable computational tool in studies of the large-scale structure of the universe, a central component of cosmology’s quest to determine the origins and the fate of the universe. In galaxies and galaxy clusters, research has shown that dark matter is distributed in roughly spherical “halos” that surround ordinary, visible, matter.

“Halo finding is one of the most important tasks in post-processing of cosmology simulations,” said Zarija Lukic, a project scientist in the CRD’s Computational Cosmology Center. “A lot of science comes from first identifying what, for example, are some objects you might associate with galaxies in the sky. And these are dense objects, gravitationally bound objects, so it is a very nontrivial task to find them all in a large simulation, and then to obtain their intrinsic properties, like the position, velocity, mass and others.”

One of the challenges of halo finding is that researchers must look at the data over the entire domain to find all the halos and determine their key characteristics. This requires dumping the whole dataset to hard disk and then running the halo finder to analyze it.

“Traditionally we write out the data—say, every 100 time steps—so that we can track these halos,” Lukic explained. “The problem is, the process of writing to disk can take a bunch of time, and then you have these really big files and you have to post-process them, which means reading in the data from disk and processing it to reconstruct the assembly history of a halo.”

Streamlining with ‘Sidecars’

In transit analytics using the sidecar approach is intended to streamline this process. The sidecar cores can function as short-term file memory or as a quick place to transfer data during a simulation, thus eliminating the need to wait until a simulation is done before starting the data analysis.

“With in transit analytics using the sidecars, you don’t have to stop the simulation while the halo finder is running,” said Vince Beckner, a member of the CCSE who designed and supports the BoxLib I/O framework and architected the sidecar technology. “Instead, part of the simulation’s resources are allocated to the sidecars; the computational cores copy the data to the sidecars for analysis, and the computation continues.”

Brian Friesen, a post-doc in the NERSC Exascale Science Applications Program (NESAP) who is optimizing BoxLib for use on NERSC’s next-generation systems,designed the generic BoxLib analysis interface that Nyx uses to communicate with the halo finder. In early testing, he found that running the halo finder with in transit analytics on a smaller problem—a 10243 grid—yielded significant data reductions.

“The size of a plotfile for a 10243 simulation is on the order of 10 GB,” Friesen said. “The size of the halo data—the locations and total masses—is about 10 MB. If you know what diagnostics you want from a simulation, then processing the data on the fly can be very useful.”

Looking ahead, the Nyx/BoxLib team has been selected to participate in NERSC’s new Early Burst Buffer Users Program, where they will be able to further test their code—including the in transit analytics—using the new Burst Buffer feature on the center’s newest supercomputer, Cori. The current implementation of the sidecars shuttles the data via MPI—that is, over the computer’s interconnect. Using the Burst Buffer could be a good alternative.

“We are exploring using the Burst Buffer for in transit analytics to remove the need to write, store and post-process as many plotfiles as are currently needed,” Almgren said. “This will allow fast, temporary storage of the data to be analyzed while the main simulation continues to run, with the only disruption being the time to write to the Burst Buffer.”

In the long run, the team is excited about expanding the in transit capabilities of the sidecar technique and applying it to other research areas.

“In the end, we want to get the most science done,” Almgren said. “In transit may not always be the fastest way to go, but having both the in situ and in transit capabilities in BoxLib enables us to investigate the tradeoffs while running real science applications on the latest machines. It means we can do more science, and that’s the point.”

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy. »Learn more about computing sciences at Berkeley Lab.