Early Edison Users Deliver Results

January 31, 2014

Contact: Margie Wylie, mwylie@lbl.gov, +1 510 486 7421

Before any supercomputer is accepted at NERSC, scientists are invited to put the system through its paces during an “early science” phase. While the main aim of this period is to test the new system, many scientists are able to use the time to significantly advance their work. (Related story: “Edison Electrifies Scientific Computing.”)

The Fate of Sequestered CO2

David Trebotich is modeling the effects of sequestering carbon dioxide (CO2) deep underground. The aim is to better understand the physical and chemical interactions between CO2, rocks and the minute, saline-filled pores through which the gas migrates. This information will help scientists understand how much we can rely on geologic sequestration as a means of reducing greenhouse gas emissions, which cause climate change.

The fine detail of this simulation—which shows computed pH on calcite grains at 1 micron resolution—is necessary to better understand what happens when the greenhouse gas carbon dioxide is injected underground rather than being released into the atmosphere to exacerbate climate change. (Image: David Trebotich)

Unlike today’s large-scale models, which cannot resolve microscale features, Trebotich’s simulations capture physical and chemical processes at resolutions ranging from hundreds of nanometers to tens of microns. They cover only a tiny volume, a tube just a millimeter wide and less than a centimeter long, but in exquisitely fine detail.

“We’re definitely dealing with big data and extreme computing,” said Trebotich. “The code, Chombo-Crunch, generates datasets of one terabyte for just a single 100-microsecond time step, and we need to do 16 seconds of that to match a ‘flow-through’ experiment,” he said. Carried out by the Energy Frontier Research Center for Nanoscale Control of Geologic CO2, the experiment measured effluent concentrations resulting from injecting a solution of dissolved carbon dioxide through a tube of crushed calcite.
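The figures in Trebotich’s quote imply a very large number of time steps. The back-of-envelope sketch below works through that arithmetic, assuming decimal units and, hypothetically, that every step’s one-terabyte dataset were kept. The article does not say how many steps are actually written to disk, so the total is an upper bound for illustration, not a reported figure. The function and constant names are illustrative only.

```python
# Back-of-envelope data-volume estimate for the Chombo-Crunch run described above.
# Hypothetical assumption (not stated in the article): every time step's 1 TB
# dataset is retained. Decimal units: 1 TB = 10**12 bytes, 1 PB = 10**15 bytes.

TIME_STEP_S = 100e-6      # 100-microsecond time step
DATA_PER_STEP_TB = 1.0    # ~1 TB generated per time step
SIMULATED_TIME_S = 16.0   # 16 seconds of physical time to match the experiment


def steps_needed(simulated_time_s: float, time_step_s: float) -> int:
    """Number of time steps needed to cover the simulated interval."""
    return round(simulated_time_s / time_step_s)


def total_data_pb(n_steps: int, tb_per_step: float) -> float:
    """Total output in petabytes if every step's dataset were retained."""
    return n_steps * tb_per_step / 1000.0


n = steps_needed(SIMULATED_TIME_S, TIME_STEP_S)
print(f"time steps: {n:,}")
print(f"data if all steps kept: {total_data_pb(n, DATA_PER_STEP_TB):,.0f} PB")
```

Running it prints 160,000 time steps and 160 PB. Even if only 1 percent of steps were saved, that would still be about 1.6 PB of output.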

Edison’s high memory-per-node architecture means that more of each calculation (and the resulting temporary data) can be stored close to the processors working on it. As an early user of Edison, Trebotich was invited to run his codes to help test the machine. His simulations run 2.5 times faster than on Hopper, the previous flagship system, reducing the time it takes him to get a solution from months to just weeks.

“The memory bandwidth makes a huge difference in performance for our code,” Trebotich said. In fact, he expects his code would run slower on supercomputer architectures that have many more processors but less memory per processing core. Trebotich also pointed out that he needed to make only minimal changes to the code to move it from Hopper to Edison.

The aim is to eventually merge such finely detailed modeling results with large-scale simulations for more accurate models. Trebotich is also working on extending his simulations to shale gas extraction using hydraulic fracturing. The code framework could also be used for other energy applications, such as electrochemical transport in batteries.

Our Universe: The Big Picture

Using the Nyx code on Edison, scientists were able to run the largest simulation of its kind (370 million light years on each side) showing neutral hydrogen in the large-scale structure of the universe. The blue webbing in the image represents the gas responsible for the Lyman-alpha forest signal. Yellow marks regions of higher density, where galaxy formation takes place. (Image: Casey Stark)

Zarija Lukic works at the other end of the scale. A cosmologist with Berkeley Lab’s Computational Cosmology Center (C3), Lukic models megaparsecs of space in an attempt to understand the large-scale structure of the universe.

Since we can’t step outside our own universe to see its structure, cosmologists like Lukic examine the light from distant quasars and other bright light sources for clues.

When light from these distant quasars passes through clouds of hydrogen, the gas leaves a distinctive pattern of absorption lines in the light’s spectrum. By studying these patterns (which look like dense clusters of squiggles, known as the Lyman alpha forest), scientists can better understand what lies between us and the light source, revealing how structure formed in the universe.

Using the Nyx code developed by Berkeley Lab’s Center for Computational Sciences and Engineering, Lukic and colleagues are creating virtual “what-if” universes to help cosmologists fill in the blanks in their observations.

Researchers use a variety of possible cosmological models (something like universal recipes), calculating for each the interplay between dark energy, dark matter, and the baryons that flow into gravity wells to become stars and galaxies. Cosmologists can then compare these virtual universes with real observations.

“The ultimate goal is to find a single physical model that fits not just Lyman alpha forest observations, but all the data we have about the nature of matter and the universe, from cosmic microwave background measurements to results from experiments like the Large Hadron Collider,” Lukic said.

Using 2 million hours of early-access time on Edison, Lukic and collaborators performed one of the largest Lyman alpha forest simulations to date: the equivalent of a cube measuring 370 million light years on each side. “With Nyx on Edison we’re able, for the first time, to get to volumes of space large enough and with resolution fine enough to create models that aren’t thrown off by numerical biases,” he said. Lukic and his collaborators are preparing their results for publication. Lukic expects his work on Edison will become “the gold standard for Lyman alpha forest simulations.”

Large-scale simulations such as these will be key to interpreting the data from many upcoming observational missions, including the Dark Energy Spectroscopic Instrument (DESI). The work supports the Dark Universe project, part of the Department of Energy’s Scientific Discovery through Advanced Computing (SciDAC) program.

Growing Graphene and Carbon Nanotubes

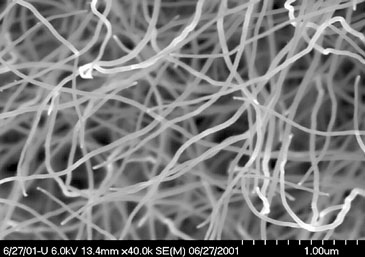

Back down at the atomic scale, Vasilii Artyukhov, a postdoctoral researcher in materials science and nanoengineering at Rice University, used 5 million processor hours during Edison’s testing phase to simulate how graphene and carbon nanotubes are “grown” on metal substrates using chemical vapor deposition.

This scanning electron microscope image shows carbon nanotubes “grown” by chemical vapor deposition. Rice University researchers are using Edison to better understand the process, which could lead to industrial-scale production of the nanomaterial. Carbon nanotubes hold promise for a host of applications, including improved batteries and flexible solar cells. (Image: NASA)

Graphene (one-atom-thick sheets of graphite) and carbon nanotubes (essentially, graphene rolled into tubes) are considered “wonder materials” because they are lightweight yet strong and electrically conductive, among other properties. These nanomaterials offer the prospect of vehicles that use less energy, thin and flexible solar cells, and more efficient batteries, provided they can be produced on an industrial scale.

“We’re trying to come up with some ideas to improve and scale up the growth and quality of graphene,” said Artyukhov. “We especially want to develop a theory for the growth of carbon nanotubes of a strictly needed type,” he said. Today’s methods produce a mish-mash of tubes with different properties. For instance, semiconducting types are useful for electronics and metallic types for high-current conduits. For structural purposes the type is less important than the length. That’s why the group is also investigating how to grow longer tubes on a large scale.

For Artyukhov’s purposes, Edison’s fast connections between processor nodes, provided by the Aries interconnect wired in a “dragonfly” topology, have allowed him to run his code on twice as many processors, and at twice the speed, as before. “Our calculations are very communication intensive. The processors need to exchange a lot of information and that can limit the number of processors we use and thus the speed of our calculations,” he said.

The Rice University researcher is also using molecular dynamics codes, “which aren’t as computationally demanding, but because Edison is so large, you can run many of them concurrently,” he said.

Working with Rice University researcher Boris Yakobson, Artyukhov and his collaborators are preparing two sets of results for publication based on their early access to Edison. During this testing period, scientists can use the system without the time being charged against their allocations, which can be especially valuable for early-career researchers such as Artyukhov: “It opens you to trying things that you wouldn’t normally try,” he said.

Better Combustion for New Fuels

This volume rendering shows the reactive hydrogen/air jet in crossflow in a combustion simulation. (Image: Hongfeng Yu, University of Nebraska)

Combustion, whether in automobile engines or power plants, provides about 85 percent of the energy we use, and that’s not expected to change anytime soon. To get greater efficiency and to reduce emissions of pollutants and carbon dioxide, engineers are turning up the pressure in combustion chambers and burning new kinds of fuels. And that’s putting a strain on existing designs.

Jackie Chen and her research team at Sandia National Laboratories are investigating how to improve combustion using new engine designs and fuels such as biodiesel, hydrogen-rich “syngas” from coal gasification and alcohols like ethanol. Her group models the behavior of burning fuels by simulating some of the underlying chemical and mixing conditions found in these combustion engines in simple laboratory configurations, using a direct numerical simulation code developed at Sandia.

During Edison’s early science period, Chen and postdoctoral researchers Hemanth Kolla and Sgouria Lyra modeled hydrogen and oxygen mixing and burning in a transverse jet configuration commonly used in gas turbine combustors for aircraft engines and industrial power plants. The simulations are being performed in tandem with experiments, with the goal of understanding how chemical reactions shape the complex flow field and how this gives rise to instabilities, which are critical to device safety and performance.

The team was able to run their direct numerical simulation code, S3D, on 100,000 processors at once: “We observed a four- to five-fold performance improvement over the Cray XE6 (Hopper) on Edison,” Chen said.

“DNS codes are typically memory-bandwidth limited, and Edison seems to help,” she said. “The fast processors also help with the throughput.”

Her code also generates a lot of data: “It takes billions of grid points to resolve a 3D turbulent flow,” said Chen. “A typical run on Edison may drop one-fifth of a petabyte of raw data,” she said. “The high I/O speeds of the Lustre file systems were helpful in efficiently generating and analyzing the data, at 6-10 GB/s read performance and around 2.5 GB/s write.”
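Chen’s data volume and I/O rates can be combined into a rough estimate of how long it takes just to move one run’s output. The sketch below assumes decimal units and that the quoted rates are sustained for the entire transfer, which real file systems do not guarantee; the names are hypothetical, chosen for illustration.

```python
# Rough I/O timing for one S3D run on Edison, using the figures Chen quotes.
# Assumptions (not from the article): decimal units (1 PB = 10**15 bytes,
# 1 GB = 10**9 bytes) and the quoted rates sustained for the whole transfer.

RUN_OUTPUT_BYTES = 0.2e15      # "one-fifth of a petabyte"
WRITE_RATE_BPS = 2.5e9         # ~2.5 GB/s write
READ_RATES_BPS = (6e9, 10e9)   # 6-10 GB/s read


def transfer_hours(n_bytes: float, rate_bytes_per_s: float) -> float:
    """Hours needed to move n_bytes at a constant rate in bytes per second."""
    return n_bytes / rate_bytes_per_s / 3600.0


print(f"write: {transfer_hours(RUN_OUTPUT_BYTES, WRITE_RATE_BPS):.1f} h")
for rate in READ_RATES_BPS:
    hours = transfer_hours(RUN_OUTPUT_BYTES, rate)
    print(f"read at {rate / 1e9:.0f} GB/s: {hours:.1f} h")
```

Under these assumptions it prints about 22.2 hours to write one run’s output and roughly 5.6 to 9.3 hours to read it back, which puts the quoted I/O rates in perspective.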

"In the end, what really matters isn’t the technical specifications of a system," said Chen. "What really matters is the science you can do with it, and on that count, Edison is off to a promising start."

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.