AI and Machine Learning

AI is transforming science across domains and application areas within the DOE science portfolio, and the computational needs of scientists developing AI applications are growing. In response, NERSC works in three areas: deploying systems for AI, engaging deeply with science teams to build AI-enabled applications, and training the wider scientific community.

NERSC has driven the emergence of modern AI and deep learning for science in recent years.

Pushing AI scale and performance

Our team built the first deep learning application to run on more than 10,000 nodes, tackling scientific tasks in climate science and Large Hadron Collider (LHC) physics (presented at SC17). This was followed by the first exascale deep learning application, led by NERSC, which won the 2018 Gordon Bell Prize. NERSC has continued to lead research into the performance of AI for science at scale, with recent work presented at SC24.

Deploying a system built for AI

In 2021, NVIDIA described NERSC’s flagship system, Perlmutter, as the world’s fastest AI supercomputer at the time, and the system was quickly made available for open science. Early applications included the first deep learning model to match the skill of numerical weather prediction, as well as novel particle physics publications. Perlmutter continues to be the most productive supercomputer for AI for science, supporting a wide range of production-level projects, including the 36 projects selected through our 2024 GenAI call.

Leading first-of-their-kind deep learning applications

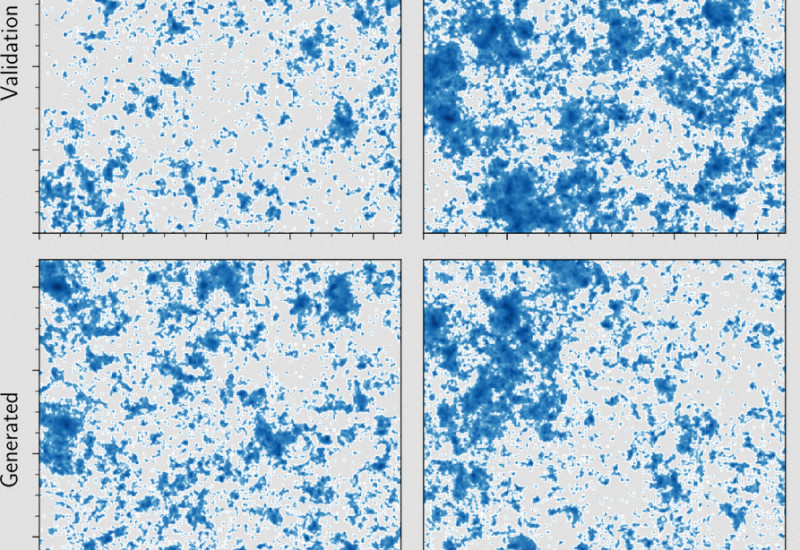

NERSC led several first-of-a-kind deep learning applications, including the first generative deep learning models for science (CosmoGAN and CaloGAN) in 2017 and the first self-supervised deep learning applications (for cosmology, in 2020 and 2021). More recently, NERSC has been involved in pioneering work on the distinct requirements of foundation models in science, published at NeurIPS 2023 and in Physical Review D.

Empowering the community with tutorials and schools

NERSC has led the Deep Learning at Scale Tutorial at the SC conference since 2018. NERSC has also co-organized in-person Deep Learning for Science schools at Berkeley Lab, featuring top academic and industry lecturers. Overall, NERSC tutorials and schools have reached thousands of participants, bringing AI expertise to the wider science and HPC communities.

Cultivating a rich AI ecosystem

NERSC provides powerful computing systems for science, including our current flagship Perlmutter supercomputer, which is well suited to AI workloads with more than 7,000 NVIDIA A100 GPUs.

NERSC also provides a rich software ecosystem for AI, including prebuilt software environments, containers, and fully customizable user environments.

Other relevant offerings for AI users include a JupyterHub service, the Spin platform for user-defined services, and the Superfacility API for interacting with NERSC systems in integrated and automated ways.