NERSC Global Filesystem Played a Key Role in Discovery of the Last Neutrino Mixing Angle

February 7, 2013

By John Hules

Contact: cscomms@lbl.gov

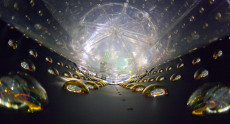

Daya Bay Neutrino Facility in China. Photo by Roy Kaltschmidt, Lawrence Berkeley National Laboratory.

Discovery of the last neutrino mixing angle — one of Science magazine’s top ten breakthroughs of the year 2012 — was announced in March 2012, just a few months after the Daya Bay Neutrino Experiment’s first detectors went online in southeast China. Collaborating scientists from China, the United States, the Czech Republic, and Russia were thrilled that their experiment was producing more data than expected, and that a positive result was available so quickly.

But that result might not have been available so quickly without the NERSC Global Filesystem (NGF) infrastructure, which allowed staff at the U.S. Department of Energy’s (DOE’s) National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory (Berkeley Lab) to rapidly scale up disk and node resources to accommodate the surprisingly large influx of data.

NGF, which NERSC started developing in the early 2000s and deployed in 2006, was designed to make computational science more productive, especially for data-intensive projects like Daya Bay. NGF provides shared access to large-capacity data storage for researchers working on the same project, and it enables access to this data from any NERSC computing system. As a result, scientists no longer waste time moving large data sets back and forth between systems, as they had to when each computer had its own file system. NERSC was one of the first supercomputer centers to provide a center-wide file system.

Coping with Success

The Daya Bay experiment had been expected to produce large data sets; after all, the nuclear reactors of the China Guangdong Nuclear Power Group at Daya Bay and nearby Ling Ao produce millions of quadrillions of electron antineutrinos every second. However, neutrinos and antineutrinos are very difficult to detect: they pass through most materials, even our bodies, without leaving a trace. Nevertheless, the Daya Bay physicists expected the eight massive detectors buried in the nearby mountains to produce enough high-quality data to measure, for the first time, θ13 (pronounced “theta-one-three”), the last unobserved parameter of neutrino oscillation and a key to understanding the difference between matter and antimatter.

Craig Tull, head of Berkeley Lab’s Science Software Systems Group and U.S. manager of the Daya Bay experiment’s overall software and computing effort, had been working with NERSC and Energy Sciences Network (ESnet) staff to ensure that data coming from Daya Bay could be processed, analyzed, and shared in real time, providing scientists immediate insight into the quality of the physics data and the performance of the detectors.

The plan was this: Shortly after the experimental data was collected in China, it would travel via the National Science Foundation’s GLORIAD network and DOE’s ESnet to NERSC in Oakland, California. At NERSC the data would be processed automatically on the PDSF and Carver systems, stored on the PDSF file system (a dedicated resource for high energy and nuclear physics), and shared with collaborators around the world via the Daya Bay Offline Data Monitor, a web-based “science gateway” hosted by NERSC. NERSC is the only U.S. site where all of the raw, simulated, and derived Daya Bay data were to be analyzed and archived.

The first six detectors to go online performed beyond expectations. Between December 24, 2011, and February 17, 2012, they recorded tens of thousands of interactions of electron antineutrinos, about 50 to 60 percent more than anticipated, according to Tull. That amounted to about 350 to 400 gigabytes (GB) of data per day. “There were three things that caught us by surprise,” he says. “One was that we were taking more data than projected. Two was that the initial processing of the data did not reduce the volume as expected. And three was that the ratio of I/O [input/output] operations to CPU operations was much higher for Daya Bay analysis than, say, a simulation on a NERSC computer. The combination of those three things created larger than anticipated I/O needs.”
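
For a sense of scale, the daily figure can be turned into a rough total for that first data-taking period. The sketch below is a back-of-the-envelope estimate, not a number reported by the collaboration: it assumes a constant daily rate, decimal units (1 TB = 1,000 GB), and that both the start and end dates count as data-taking days. The function name `total_volume_tb` is made up for this illustration.

```python
from datetime import date

# Figures quoted in the article.
START = date(2011, 12, 24)
END = date(2012, 2, 17)
DAILY_GB_RANGE = (350, 400)  # gigabytes recorded per day


def total_volume_tb(daily_gb: float, start: date, end: date) -> float:
    """Estimate the total volume in terabytes for a constant daily rate.

    Assumptions (not from the article): decimal units and one full day
    of data for every calendar day from start to end, inclusive.
    """
    days = (end - start).days + 1
    return daily_gb * days / 1000


days = (END - START).days + 1
low, high = (total_volume_tb(gb, START, END) for gb in DAILY_GB_RANGE)
print(f"{days} days at {DAILY_GB_RANGE[0]}-{DAILY_GB_RANGE[1]} GB/day: "
      f"{low:.1f} to {high:.1f} TB")
```

Under those assumptions the period spans 56 days and works out to roughly 20 terabytes.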

In short, Tull was worried that the PDSF file system did not have the space and bandwidth to handle all the incoming data. So in January 2012 he met with Jason Hick, leader of NERSC’s Storage Systems Group, to find a solution. “NGF was already running at a petabyte scale,” Hick says, “and Craig’s requirements were largely satisfied by simply scaling up the current system. It’s exciting to see NERSC’s vision for NGF realized when we can directly help scientists like Craig achieve their aims.”

Quick Access, Fast Analysis

In less than a week, the Storage Systems team, including Greg Butler and Rei Lee, added more disk space to NGF and updated its configuration, then worked with Tull to reroute the daily Daya Bay data runs to NGF. As a result, processed data became available to Daya Bay collaborators within two hours of being collected at the detectors. Only two months later, in March 2012, the Daya Bay Collaboration announced that θ13 equals 8.8°, outpacing other neutrino experiments in France, South Korea, Japan, and the U.S.

In May 2012, under NERSC’s new Data Intensive Computing Pilot Program, Daya Bay was one of eight projects to receive an award of resources, including disk space, archival storage, and processor hours, to improve data processing performance in anticipation of the experiment going into full production, which occurred in October 2012 when the last two detectors went online. The experiment will continue taking data for another three years to improve the accuracy of the results and to do a variety of analyses.

By December 2012, the project had accumulated half a petabyte of data, and it was time to reprocess all of the data using the latest refinements in software and calibrations, in preparation for a January collaboration meeting in Beijing. Tull consulted with Iwona Sakrejda of NERSC’s Computational Systems Group and received a temporary loan of 1,600 cores for processing the data.

“We were predicting that it would take three to four weeks to do the whole analysis,” Tull says, “but with Jason and Iwona’s help, we were able to do it in eight days instead of three to four weeks. It was the combination of NGF and the extra cores which shortened the time to finish production.”
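
The figures Tull quotes imply a speedup and a data rate that are easy to work out. The sketch below is illustrative arithmetic only: it assumes decimal units, that all 1,600 cores were busy for the full eight days (an upper bound), and that the half petabyte was read exactly once. None of these assumptions comes from the article, and the function names are invented for this example.

```python
# Figures quoted in the article.
CORES = 1_600
ACTUAL_DAYS = 8
PREDICTED_DAYS = (21, 28)   # "three to four weeks"
DATASET_BYTES = 0.5e15      # "half a petabyte" (decimal units assumed)


def speedup(predicted_days: float, actual_days: float) -> float:
    """Ratio of predicted to actual wall-clock time."""
    return predicted_days / actual_days


def core_hours(cores: int, days: float) -> float:
    """Upper bound on core-hours: every core busy for the whole period."""
    return cores * days * 24


def mean_read_rate_mb_s(n_bytes: float, days: float) -> float:
    """Average rate in MB/s needed to read n_bytes once within the period."""
    return n_bytes / (days * 86_400) / 1e6


lo, hi = (speedup(d, ACTUAL_DAYS) for d in PREDICTED_DAYS)
print(f"speedup: {lo:.2f}x to {hi:.2f}x")
print(f"core-hours (upper bound): {core_hours(CORES, ACTUAL_DAYS):,.0f}")
print(f"mean read rate: {mean_read_rate_mb_s(DATASET_BYTES, ACTUAL_DAYS):.0f} MB/s")
```

With these inputs the reprocessing ran about 2.6 to 3.5 times faster than predicted, used at most 307,200 core-hours, and needed a sustained read rate of roughly 720 MB/s. A demand of that size is consistent with Tull crediting the file system as well as the extra cores.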

The Daya Bay story is typical of what NERSC expects to see more of in the future: data-intensive science that requires high-bandwidth, high-volume, and low-response-time data systems.

“For the last four years, NERSC has been a net importer of data,” says NERSC Division Director Sudip Dosanjh. “About a petabyte of data is typically transferred to NERSC every month for storage, analysis, and sharing, and monthly I/O for the entire center is in the 2 to 3 petabyte range. In the future, we hope to acquire more resources and personnel so that science teams with the nation’s largest data-intensive challenges can rely on NERSC to the same degree they already do for modeling and simulation, and the entire scientific community can easily access, search, and collaborate on the data stored at NERSC.”
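
The monthly volumes Dosanjh cites can be expressed as average sustained rates. The sketch below is a rough conversion only: it assumes a 30-day month and decimal petabytes, and it ignores the bursts that real traffic would show. The helper name `average_gbps` is hypothetical.

```python
SECONDS_PER_DAY = 86_400


def average_gbps(petabytes_per_month: float, days_in_month: int = 30) -> float:
    """Convert a monthly volume to an average rate in gigabits per second.

    Assumptions (not from the article): 1 PB = 1e15 bytes and traffic
    spread evenly over a 30-day month.
    """
    bits = petabytes_per_month * 1e15 * 8
    return bits / (days_in_month * SECONDS_PER_DAY) / 1e9


for label, pb in [("inbound transfers", 1), ("center I/O, low", 2), ("center I/O, high", 3)]:
    print(f"{label}: {pb} PB/month is about {average_gbps(pb):.1f} Gb/s on average")
```

On that basis, a petabyte a month of inbound data averages about 3 Gb/s around the clock, and 2 to 3 petabytes of monthly I/O averages about 6 to 9 Gb/s.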

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.