Big Data Hits the Beamline

A Data Explosion is Driving a New Era of Computational Science at DOE Light Sources

November 26, 2013

By Jacob Berkowitz for DEIXIS Magazine

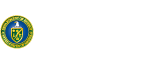

This three-dimensional rendering from computed microtomography data shows matrix cracks and individual fiber breaks in a ceramic matrix composite specimen tested at 1,750 C. Each of numerous ceramic samples is imaged with powerful X-ray scattering techniques over time to track crack propagation and sample damage, producing prodigious amounts of data. Credit: Hrishi Bale and Rob Ritchie, Lawrence Berkeley National Laboratory

When scientists from around the world visit Dula Parkinson’s microtomography beamline at Lawrence Berkeley National Laboratory’s Advanced Light Source, they all want the same thing: amazing, scientifically illuminating, micron-scale X-ray views of matter, whether a fiber-reinforced ceramic composite, an energy-rich shale, or a dinosaur bone fragment.

Unfortunately, many of them have left lately with something else: debilitating data overload.

“They’re dying because of the amount of data I’m giving them,” says Parkinson, a beamline scientist at the Advanced Light Source (ALS). “Often they can’t even open up their whole data sets. They contact me and say ‘Dula, do you have any idea what I can do? I haven’t been able to look at my data yet because they crash my computer.’”

Data sets from light sources, which produce X-rays of varying intensity and wavelengths, aren’t enormous by today’s standards, but they’re quickly getting bigger due to technology improvements. Meanwhile, other fields – astrophysics, genomics, nuclear science and more – are seeing even mightier explosions in information from observations, experiments and simulations.

If all this knowledge is to benefit the world, scientists must find the insights buried within. They must develop ways to mine mountains of elaborate information in minutes or hours, rather than days or weeks, with less-than-superhuman efforts.

To deal with the challenge, Department of Energy scientists—whether at light sources or particle colliders—are collaborating with computational scientists and mathematicians on data-handling and analysis tools.

“The data volumes are large, but I think even more importantly, the complexity of the data is increasing,” says Chris Jacobsen, a 25-year veteran and associate division director at Argonne National Laboratory’s Advanced Photon Source (APS). “It’s getting less and less effective and efficient to manually examine a data set. We really need to make use of what mathematicians and computational scientists have been learning over the years in a way that we haven’t in the past.”

Big data management, analysis and simulation are driving users to new levels of high-performance computing at the ALS, the APS and three other DOE Office of Science light sources. Researchers are approaching their experiments from a data-intensive computational science perspective.

Those involved say there’s enormous opportunity to stimulate leapfrog advances in light-source science and to create innovative collaborations and a community of computational scientists specialized in such research. (See sidebar at right: Human Connections Tackle Big Data.) “Light sources are an archetype for the new data-intensive computational sciences,” says Craig Tull, leader of Berkeley Lab’s Science Systems Software group.

The Light Source Data Deluge

If there’s a symbol for the big data changes at the nation’s light sources, it’s the external computer drive. Even today, results from many beamline experiments can be loaded onto portable media like a thumb drive, just as it’s been done for decades. Back at their home institutions, researchers can use workstations to process the information from X-rays that ricochet off a protein molecule, for example, and strike detectors.

Light sources have historically operated on this manual grab-and-go data management model, reflecting the nature of synchrotron experiments.

“Synchrotrons are big machines with traditionally bite-sized experiments,” says Jacobsen, at Argonne’s APS.

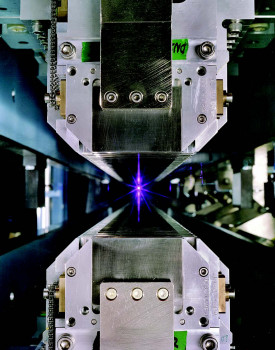

As at all such facilities, the APS’ stadium-sized, circular synchrotron accelerates electrons nearly to light speed, generating a cascade of bright X-ray photons. These photons are tuned and focused to feed 60 simultaneously operating beamlines with wavelengths ideal for resolving matter from the atomic to cellular level.

This view of Lawrence Berkeley National Laboratory’s Advanced Light Source (ALS) looks head-on into the upper and lower rows of magnets in an undulator. The vertical distance between the magnets can be adjusted to determine the wavelength emitted. In this photo, a laser simulates the burst of light produced.

What’s changed: On many DOE light source beamlines, a manageable bite of experimental data has ballooned into daily helpings of terabytes (TB – trillions of bytes). Four primary factors are driving this data volume spike, Parkinson says.

First, light source detectors are collecting images with unprecedented speed. At the APS, a new generation of detectors has turned what used to take 15 minutes of imaging into a 15-second job. Cameras already exist to capture even higher-resolution images in just milliseconds. That means at full use, APS could produce a staggering 100 TB of data a day – a rate comparable to that of the Large Hadron Collider, the giant European physics experiment.

Similarly, data output is doubling every year from the 40 beamlines at Berkeley’s ALS. And Brookhaven National Laboratory’s new National Synchrotron Light Source II (NSLS II, the sixth Office of Science light source) is expected to generate about 15,000 TB of data per year later this decade – not an enormous amount when compared to some other experiments, but a big jump for an X-ray source.
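To put the quoted figures on a common footing, the short Python sketch below converts them into sustained rates. It assumes decimal units (1 TB = 10^12 bytes, matching the "trillions of bytes" definition above), a 365-day year and continuous operation; the function and variable names are illustrative only.

```python
TB = 10**12  # bytes in a decimal terabyte ("trillions of bytes")
GB = 10**9   # bytes in a decimal gigabyte
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365  # assumption: non-leap year, round-the-clock running


def tb_per_day_to_gb_per_s(tb_per_day):
    """Convert a daily volume in TB into a sustained rate in GB/s."""
    return tb_per_day * TB / SECONDS_PER_DAY / GB


def tb_per_year_to_tb_per_day(tb_per_year):
    """Convert a yearly volume in TB into an average daily volume in TB."""
    return tb_per_year / DAYS_PER_YEAR


aps_rate = tb_per_day_to_gb_per_s(100)             # APS at full use
nsls2_daily = tb_per_year_to_tb_per_day(15_000)    # NSLS II projection

print(f"APS at full use: about {aps_rate:.2f} GB/s sustained")
print(f"NSLS II: about {nsls2_daily:.0f} TB per day on average")
```

Under these assumptions, 100 TB a day works out to a little over 1 GB every second, and 15,000 TB a year averages roughly 41 TB a day.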

Second, light sources have gotten brighter at a rate even faster than Moore’s Law of accelerating computer power. That means shorter exposure times and more data.

The shining case in point: the Linac Coherent Light Source (LCLS), the world’s most powerful X-ray laser, which came on line at DOE’s SLAC National Accelerator Laboratory in 2009. LCLS is the world’s ultimate fast camera flash, illuminating samples for one-tenth of a trillionth of a second with X-rays a billion times brighter than previous sources.

The combination of faster detectors and greater light power is opening the door to time-resolved experiments, capturing the equivalent not of photos, but video – with the attendant exponential boost in data volumes. Today, detectors running at maximum output can generate a terabyte of data per hour, a study by Berkeley Lab’s Peter Denes shows.

By 2020, that could reach 1,000 terabytes – one petabyte – per hour.

Here’s another example: Not long ago, it was common for a single tomographic X-ray scan, capturing hundreds or thousands of images as the sample rotates, to take an hour. Earlier in 2013, Parkinson says, a group at the ALS captured changes in a sample by collecting similarly sized scans once every three minutes for more than 24 hours, producing terabytes of data. “These are the users that bring the whole data system to its knees,” he adds.
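A quick back-of-the-envelope check shows how such a run adds up. The sketch below counts the scans in a 24-hour run at one scan every three minutes; the per-scan size is not given in the article, so `ASSUMED_SCAN_GB` is a purely hypothetical placeholder chosen for illustration.

```python
def scans_in_run(run_hours, minutes_per_scan):
    """Number of complete scans that fit into a run of the given length."""
    return int(run_hours * 60 // minutes_per_scan)


# Figures from the text: one scan every three minutes for (at least) 24 hours.
n_scans = scans_in_run(24, 3)

# Hypothetical per-scan size in GB; NOT a figure from the article.
ASSUMED_SCAN_GB = 10

total_tb = n_scans * ASSUMED_SCAN_GB / 1000  # decimal units: 1 TB = 1000 GB

print(f"{n_scans} scans, about {total_tb:.1f} TB at {ASSUMED_SCAN_GB} GB per scan")
```

Whatever the true scan size, the scan count alone (480 where one used to be typical) explains why a single such experiment lands in terabyte territory.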

Finally, increasingly automated data management accelerates these factors. For example, Jacobsen is leading an APS pilot project to automate data transfer from the local beamline computer to networked machines, a process that has been done manually.

These big increases in information volume also are compounded, Jacobsen says, by the variety of materials studied and efforts to integrate data from experiments performed at different wavelengths.

With so much complex information to process, he adds, “what we really need is the intelligence about how you look at the data.”

New Era of Light Source Computational Science

With the results they produce rapidly growing in quantity, variety and complexity, light sources are laboratories for developing techniques to gather that intelligence. Users are turning to high-performance computing hardware, software and, perhaps most importantly, computer scientists, launching a new era of data-intensive management, analysis and visualization at the facilities.

Two initiatives characterize the ways DOE light sources (and other DOE programs) are incorporating computational expertise and data-management capacity, whether on-site or by collaborating with a supercomputing facility – setting the groundwork for what many envision as a combination of both.

First, a Berkeley Lab project links beamline data in real time to some of the nation’s most powerful open-science computers at the lab’s National Energy Research Scientific Computing Center (NERSC), based in Oakland.

“We’re trying to move the typical data-intensive beamline into a world where they can take advantage of leadership-class, high-performance computing abilities,” says Tull, who leads the project. “In the final analysis what we’re trying to do is drive a quantum leap in science productivity.”

The software collaboration, dubbed SPOT Suite, unites computational scientists from NERSC and the lab’s Computational Research Division with ALS beamline scientists and users. They plan to capitalize on NERSC’s computational power to manage, analyze and visualize big data from ALS beamlines.

The initiative leverages DOE’s advanced Energy Sciences Network (ESnet) to transfer data from ALS to NERSC at gigabytes per second.
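As a rough illustration of what "gigabytes per second" means in practice, the sketch below estimates how long a data set would take to move at a given sustained rate. The 1 GB/s figure and the 1 TB data-set size are assumptions for the example, not measured ESnet numbers, and the calculation ignores protocol overhead and disk speed.

```python
TB = 10**12  # bytes, decimal
GB = 10**9   # bytes, decimal


def transfer_seconds(size_bytes, rate_bytes_per_s):
    """Idealized transfer time: size divided by sustained rate."""
    if rate_bytes_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_bytes / rate_bytes_per_s


# Assumed values for illustration only.
seconds = transfer_seconds(1 * TB, 1 * GB)
print(f"1 TB at 1 GB/s: about {seconds / 60:.0f} minutes")
```

At that assumed rate a terabyte moves in well under half an hour, which is what makes near-real-time processing at a remote center plausible.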

SPOT Suite users access NERSC resources via a Web portal being built by Department of Energy Computational Science Graduate Fellowship (DOE CSGF) alumnus Jack Deslippe. A beta version went live in April.

With SPOT Suite, scientists using ALS’ microtomography beamline for three-dimensional, time-resolved, micron-resolution images “can see their data being processed, analyzed and presented in visual form while they’re at the beamline,” Tull says. “This is something many of them have never seen before at any light source.”

Second, in anticipation of the big output from the LCLS—adding a data dollop on top of that from the existing Stanford Synchrotron Radiation Lightsource—SLAC installed an on-site shared high-performance computing cluster. This new model of local computer support also will be implemented at Brookhaven’s NSLS II.

“The data volumes and rates for LCLS are unprecedented for a light source,” says Amber Boehnlein, SLAC’s Head of Scientific Computing since 2011. She has a wealth of big data experience, including former responsibility for computing and application support for Fermi National Accelerator Laboratory experiments.

“We provide a robust computing and storage infrastructure common across all instruments at SLAC,” Boehnlein adds, allowing experimenters to do a first-pass analysis on local SLAC resources, rather than waiting until they return to their home institutions.

One key to effective data management, Boehnlein adds, is using these resources for local data reduction and even to cut the information flow before it starts. SLAC computational scientists are collaborating with beamline users on algorithms for what Boehnlein calls “smart data reduction.”

“People will start to develop much more sophisticated algorithms and they’ll be using those during data collection to take better, higher quality data,” she says.

Indeed, in many physics experiments, scientists set an initial filter that weeds out everything but the desired high-energy events, reducing the initial data collected by as much as 99 percent, says Arie Shoshani, who this year marks his 37th anniversary as head of Berkeley Lab’s Scientific Data Management Group.
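The idea of an up-front filter can be sketched in a few lines of Python. This is a toy model, not the trigger logic of any real experiment: the event energies are synthetic random numbers, and the threshold is derived from the sample itself only so that the demonstration discards roughly the 99 percent mentioned above. In practice a threshold would be set in advance from the physics of interest.

```python
import random


def select_events(energies, threshold):
    """Keep only events whose energy exceeds the threshold."""
    return [e for e in energies if e > threshold]


# Synthetic event energies (arbitrary units) from a seeded generator,
# so the example is reproducible. Purely illustrative data.
rng = random.Random(0)
energies = [rng.expovariate(1.0) for _ in range(100_000)]

# Choose the threshold at the sample's 99th percentile (demo only).
threshold = sorted(energies)[int(0.99 * len(energies))]

kept = select_events(energies, threshold)
reduction = 1 - len(kept) / len(energies)

print(f"kept {len(kept)} of {len(energies)} events "
      f"({reduction:.1%} discarded)")
```

The point of the sketch is the ordering: the cut is applied before anything is stored, so the discarded events never add to the data volume.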

Shoshani provides the long-ball, bird’s-eye perspective on DOE big data issues as director of the year-old Scalable Data Management, Analysis and Visualization (SDAV) Institute. SDAV’s mission is to apply existing high-performance computing software to new domains experiencing data overload.

The need for large-scale simulations of things like nuclear fusion and climate has been the primary driver in supercomputer development, Shoshani says. Light sources – and some other DOE research facilities – need heavy iron for large-scale simulations less than they need the thinking that goes into high-performance computing, but that doesn’t mean there aren’t lessons to be learned. “Whatever we know from doing large-scale simulations – from data management to visualization and indexing – can be useful now as we start dealing with large-scale experimental data,” Shoshani says.

Earlier this year he joined a discussion of hardware requirements for data processing at the 2013 Big Data and Extreme-Scale Computing meeting, jointly sponsored by DOE and the National Science Foundation.

For Parkinson, who runs the microtomography beamline, these high-performance computing tools change the experimental data he collects from a PC-crashing burden into a scientific bonanza.

“The beamline users are really excited about what’s happening,” he says. “Though it’s early days, I think it’s really going to accelerate what they can do and improve what they can do.”

Reprinted with permission from DEIXIS Magazine Annual 2013.

DEIXIS is a publication of the Krell Institute, which manages the DOE Computational Science Graduate Fellowship program.

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.