NERSC Continues Tradition of Cosmic Microwave Background Data Analysis with the Planck Cluster

October 30, 2009

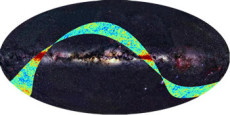

One of Planck's first images is shown as a strip superimposed over a two-dimensional projection of the whole sky as seen in visible light. Image credit: ESA, LFI & HFI Consortia; background optical image: Axel Mellinger

More than 95 percent of our universe is made up of mysterious "dark" components: approximately 22 percent of it is invisible dark matter, while another 73 percent is posited to be dark energy, the mysterious energy thought to be driving the accelerating expansion of the universe.

Armed with a new spacecraft called Planck and supercomputers at the Department of Energy's (DOE) National Energy Research Scientific Computing Center (NERSC), astronomers around the world hope to make tremendous strides toward illuminating the nature and origins of these mysterious components. They will do so by creating high-resolution maps of extremely subtle variations in the temperature and polarization of the Cosmic Microwave Background (CMB), the leftover light from the Big Bang that permeates the universe.

"The CMB is our most valuable resource for understanding fundamental physics and the origins of our universe. The Planck mission will provide the cleanest, deepest and sharpest images of the CMB ever made," says Charles Lawrence of NASA's Jet Propulsion Laboratory, principal investigator for the Planck mission in North America.

Equipped with 74 detectors in nine frequency bands, the European Space Agency's Planck observatory will map the entire sky several times during its lifetime from a vantage point called the second Lagrangian point (L2), about 1.5 million kilometers (930,000 miles) from Earth in the direction directly away from the Sun.

NASA has been a significant participant in the Planck program, even initiating the first-ever Interagency Implementation Agreement with DOE to guarantee a minimum annual allocation of NERSC resources to the Planck mission throughout its entire observing lifetime. The U.S. Planck team has also purchased additional services from NERSC, including 32 TB of storage on the NERSC Global Filesystem and technical support for a 256-processor cluster.

The spacecraft officially began taking science observations and funneling data to NERSC on August 13, 2009. NERSC is located at the Lawrence Berkeley National Laboratory's (Berkeley Lab) Oakland Science Facility.

Planck Computing at NERSC

According to Julian Borrill, a staff scientist in the Computational Cosmology Center in Berkeley Lab's Computational Research Division (CRD), the process of understanding data from a CMB mission is long and complicated. Each observation of the sky contains three main components that must be untangled from one another: instrument noise, signals from foreground sources such as dust, and the CMB signal itself.

"Because these components are correlated, we have to look at the entire dataset at once, and some of these analyses simply cannot be done without well-balanced high performance computing systems," says Borrill, who is also a member of the U.S. Planck Collaboration.

He adds that even if a petascale machine can perform quadrillions of calculations per second, it will not work for CMB research if it cannot move data in and out of the machine quickly enough.

"We looked at some supercomputers elsewhere and they simply did not have the I/O (input/output) capability that we needed," says NASA's Lawrence. "At NERSC we get a team of experts that knows how to optimize and maintain well-balanced high performance computing systems, as well as the essential advantage of having Planck team members like Julian nearby to test and run the systems, and develop and try out codes."

Realizing that not all aspects of CMB analysis are supercomputing jobs, the U.S. Planck Collaboration also bought into NERSC's PDSF (Parallel Distributed Systems Facility) cluster collaboration to complement its allocation of supercomputer time. By installing a medium-sized system at NERSC, the team plans to leverage the center's expertise in setting up and maintaining the hardware. The strategy also allows them to take advantage of the NERSC Global Filesystem, which provides a single disk space common to all NERSC machines. As a result, users do not have to save and transfer data every time they move between machines, which saves time and makes them more productive.

"NERSC has the whole package—the systems are well-balanced and suit our analysis needs, the user support is phenomenal, and the center has a long history of working with our community. This is a unique relationship that we don't have with any other computing facility in the world," says Lawrence.

In addition to teasing out instrument noise from the CMB maps, the U.S. team will modify existing codes and develop new methods for analyzing the data.

Evolution of CMB Computing at Berkeley Lab's CRD and NERSC

An artist's conception of the Planck spacecraft (Image: NASA Jet Propulsion Laboratory)

According to Lawrence, preparations for a Planck-like mission began in the U.S. in the late 1990s, when NASA was supporting balloon-borne CMB missions like BOOMERANG and MAXIMA, which scanned small patches of the sky to map the minute temperature variations in the CMB. At the time, both missions were collecting unprecedented volumes of data, and they were the first CMB experiments ever to use supercomputing resources for data analysis. Both received allocations to compute on NERSC's 600-processor Cray T3E, called Mcurie, then the fifth-fastest supercomputer in the world.

As the CMB community started incorporating supercomputers into their scientific process, they also had to develop new data analysis methods to make the most of these emerging resources. That’s when Borrill and his colleagues Andrew Jaffe and Radek Stompor stepped in and developed MADCAP, the Microwave Anisotropy Dataset Computational Analysis Package. The researchers used this software to create maps and angular power spectra from the BOOMERANG and MAXIMA data. These analyses would eventually confirm that the universe is approximately geometrically flat.

Berkeley Lab has been at the forefront of this research ever since. Borrill now co-leads the Computational Cosmology Center (C3), a collaboration between CRD and the Lab's Physics Division that pioneers algorithms and methods for carrying out CMB data analysis on cutting-edge supercomputers.

"BOOMERANG and MAXIMA were essentially what got the CMB community to start developing supercomputing methods for analyzing data; the Planck computing methods have grown with the experiment and supercomputer technologies," says Lawrence.

"When NERSC first started allocating supercomputing resources to the CMB research community in 1997, it supported half a dozen users and two experiments. Almost all CMB experiments launched since then have used the center for data analysis in some capacity, and today NERSC supports around 100 researchers from a dozen experiments," says Borrill.

He says that advances in CMB detectors have led to a trend in which the volume of data collected doubles about every 18 months, with upcoming ground-based experiments such as PolarBeaR and QUIET-II set to exceed the Planck data volume by 2 to 3 orders of magnitude. "Coincidentally, this trend mirrors Moore's Law and means that we have to stay on the leading edge of computation just to keep up with CMB data," says Borrill.
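To put the "doubles about every 18 months" figure in perspective: a volume that doubles every 1.5 years grows by a factor of 2^(t/1.5) after t years. The short Python sketch below is only a back-of-the-envelope calculation, assuming the doubling time stays fixed at exactly 1.5 years; the function names are hypothetical and used purely for illustration. It inverts the formula to estimate how long such growth takes to reach 2 and 3 orders of magnitude (100-fold and 1,000-fold).

```python
import math

# Assumption: a constant doubling time of 1.5 years ("about every 18 months").
DOUBLING_TIME_YEARS = 1.5


def growth_factor(years: float) -> float:
    """Factor by which data volume grows after `years` at a fixed doubling time."""
    return 2.0 ** (years / DOUBLING_TIME_YEARS)


def years_to_grow(factor: float) -> float:
    """Years needed for data volume to grow by `factor` at a fixed doubling time."""
    # Number of doublings needed is log2(factor); each doubling takes 1.5 years.
    return DOUBLING_TIME_YEARS * math.log2(factor)


if __name__ == "__main__":
    for orders in (2, 3):
        factor = 10 ** orders
        print(f"10^{orders}x growth takes about {years_to_grow(factor):.1f} years")
```

At this rate, 2 orders of magnitude corresponds to roughly a decade of steady growth and 3 orders to roughly 15 years. The calculation illustrates the general trend only; it says nothing about the schedule of any particular experiment.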

For more on the history of Computational Cosmology research at the Berkeley Lab, please visit:

http://www.scidacreview.org/0704/html/cmb.html

http://newscenter.lbl.gov/feature-stories/2008/12/10/cosmic-data/

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.