NERSC's Franklin Supercomputer Upgraded to Double Its Scientific Capability

July 20, 2009

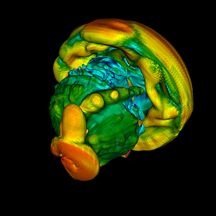

OCEAN EDDIES: This image comes from a computer simulation modeling eddies in the ocean. An interesting feature is the abundance of eddies away from the equator, which runs through the center of the image at y=0. A research collaboration led by Paola Cessi of the Scripps Institution of Oceanography performed more than 15,000 years' worth of deep-ocean circulation simulations using 1.6 million processor-core hours on the upgraded Franklin system.

The Department of Energy's (DOE) National Energy Research Scientific Computing (NERSC) Center has officially accepted a series of upgrades to its Cray XT4 supercomputer, providing the facility's 3,000 users with twice as many processor cores and an expanded file system for scientific research. NERSC's Cray supercomputer is named Franklin in honor of Benjamin Franklin, the United States' pioneering scientist.

“Franklin's upgrade has already provided a tremendous benefit to the DOE computational science community, which now has a system in which the aggregate system performance is double that of the original Franklin system,” says Kathy Yelick, NERSC Division Director. “The key to improving application throughput is to maintain balance for that workload, so when we doubled the number of cores, we also doubled the memory capacity and bandwidth, and tripled the I/O bandwidth.”

To keep scientific productivity up during the work, the upgrades were implemented in phases. For the quad-core processor and memory upgrade, Franklin was partitioned: one partition was upgraded and tested while the rest of the system remained available to users.

“The phased upgrade was engineered by Cray and NERSC staff specifically for the Franklin upgrade, and is now a model for upgrading future systems,” says Wayne Kugel, Senior Vice President of Operations and Support at Cray.

A later upgrade increased the file system capacity to 460 terabytes and tripled the speed at which data moves in and out of the system. As a result of these upgrades, the amount of available computing time roughly doubled for scientists studying everything from global climate change to atomic nuclei. The final Franklin system has a theoretical peak performance of 355 teraflop/s, three and a half times that of the original system. The increase in peak performance comes from doubling the number of cores and doubling the number of floating-point operations per clock cycle, partly offset by a modest drop in the clock rate.
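The peak-performance claim can be checked with a back-of-the-envelope calculation. Write peak performance as P = N · F · f, where N is the number of cores, F the floating-point operations per core per clock cycle, and f the clock rate. These symbols are introduced here for illustration, and the article does not give the actual clock rates. Using only the stated factors (2× cores, 2× operations per cycle, about 3.5× peak), the implied clock-rate ratio is:

$$
\frac{P_{\text{new}}}{P_{\text{old}}}
  = \frac{N_{\text{new}}}{N_{\text{old}}}\cdot\frac{F_{\text{new}}}{F_{\text{old}}}\cdot\frac{f_{\text{new}}}{f_{\text{old}}}
  = 2\cdot 2\cdot\frac{f_{\text{new}}}{f_{\text{old}}}\approx 3.5
\quad\Longrightarrow\quad
\frac{f_{\text{new}}}{f_{\text{old}}}\approx\frac{3.5}{4}=0.875
$$

In other words, a clock rate roughly 12 percent lower than the original accounts for the gap between a fourfold and a 3.5-fold increase. The same ratio implies an original peak of about 355 / 3.5 ≈ 101 teraflop/s. Because "three and a half times" is itself a rounded figure, both results are approximate.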

“Our focus has always been on application performance, and we estimated the doubling of total system performance prior to the upgrade; the growing gap between application performance and peak emphasizes the need to measure and evaluate real application performance,” says Yelick.

“This acceptance is a great achievement for the NERSC facility, and it wouldn't have been possible without a team of diligent staff from NERSC and Cray to quickly perform the hardware upgrades, identify and respond to necessary software changes, and guide our users throughout the process,” she adds.

Improved Franklin Furthers Science

Understanding Global Climate

“We are very pleased with the improvements on Franklin. Since these upgrades were completed, our scientific output has increased by 25 percent — meaning we can make more historical weather maps in significantly less time,” says Gil Compo, a climate researcher at the CIRES Climate Diagnostics Center at the University of Colorado at Boulder and at the NOAA Earth System Research Laboratory. “NERSC’s excellent computing facilities and phenomenally helpful consulting staff were extremely important to our research.”

A NERSC user since 2007, Compo leads the 20th Century Reanalysis Project, which is reconstructing global weather conditions in six-hour intervals from 1871 to the present. Compo notes that these weather maps will help researchers forecast weather trends of the next century by assessing how well the computational tools used in projections can recreate the conditions of the past.

With awards from DOE's Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program, Compo's team used NERSC systems to recreate the deadly Knickerbocker Storm of 1922, which killed 98 people and injured 133, as well as the 1930s Dust Bowl, which left half a million people homeless as dust storms rolled over the drought-stricken Great Plains of the United States.

Ocean Circulation

Another project, led by Paola Cessi of the Scripps Institution of Oceanography, performed more than 15,000 years' worth of deep-ocean circulation simulations on Franklin. The project used approximately 1.6 million processor-core hours. Insights from these experiments will give researchers a better understanding of how oceans circulate and how changes in the atmosphere affect these processes.

Energy from the Sun does not fall equally on Earth. Most sunlight is actually absorbed at the equator, and heat is transported across the globe via oceans. Cessi's project used Franklin to simulate how mesoscale oceanic flows, which are driven by surface winds and differences in solar heating, bring heat from the deep ocean to the surface. Since oceans are the biggest repositories of carbon dioxide on the planet, this research could also provide valuable insights about how oceans cycle greenhouse gases into the atmosphere and across the globe.

“As a result of the Franklin experiments, we have been able to demonstrate that the Southern Ocean exerts remarkable control over the deep stratification and overturning circulation throughout the ocean,” says Christopher Wolfe, a researcher at the Scripps Institution of Oceanography and a member of Cessi's team.

According to Wolfe, most abrupt climate change studies to date have focused on how density changes in the North Atlantic Ocean affect the strength of the Meridional Overturning Circulation (MOC), the large-scale overturning circulation of the world's oceans.

“The results of our simulations indicate that changes in the Southern Ocean forcing could also have a large impact on MOC and, therefore, on global climate,” says Wolfe.

Nuclear Physics

James Vary, a professor of physics at Iowa State University, led a research team that used Franklin to calculate the energy spectrum of the atomic nucleus of oxygen-16, which contains eight protons and eight neutrons. This is the most common isotope of oxygen and it makes up more than 99 percent of the oxygen that humans breathe. He notes that this is currently the most complex nucleus being studied with his group's methods.

"The quad-core upgrade had a significant impact on our ability to push the frontiers of nuclear science. The expanded machine made 40,000 compute cores available for our research, which allowed us to calculate the energy spectrum of a nucleus that would have been previously impossible," says Vary.

According to Vary, physicists currently do not fully understand the fundamental structure of the atomic nucleus, especially the basic forces between the protons and neutrons. A better understanding of these principles could help improve the design and safety of nuclear reactors, as well as provide valuable insights into the life and death of stars.

The current method for understanding the fundamental structure of atomic nuclei involves a lot of back-and-forth between computer simulations and laboratory experiments. Researchers use their theories about the underlying structures and forces to build computer models that calculate the energy spectrum of a particular nucleus. The results are then compared with laboratory experiments, and any discrepancies between the calculations and the measurements give researchers valuable insights into how to refine their theories. The heavier the nucleus, that is, the more protons and neutrons it contains, the more complex its structure and interactions. The ultimate goal is to precisely predict how protons and neutrons interact in extremely complex systems.

"The calculations that we did on Franklin gave us very valuable information about how to refine our theories and methods, and tell us that we have a long way to go before we can begin to understand extremely complex systems like the nucleus of a sodium atom," says Vary. His research on Franklin was conducted with an award from the Energy Research Computing Allocations Process (ERCAP).

Scientific Visualization

A SUPERNOVA'S VOLUME: This volume rendering of supernova simulation data was generated by running the VisIt application on 32,000 processors on Franklin, a Cray XT4 supercomputer at NERSC.

As computational scientists are confronted with increasingly massive datasets from supercomputing simulations and experiments, one of the biggest challenges is having the right tools to gain scientific insight from the data. A team of DOE researchers recently ran a series of experiments on some of the world's most powerful supercomputers and determined that VisIt, a leading scientific visualization application, is up to the challenge.

The team ran VisIt on 32,000 processor cores on the expanded Franklin system and tackled datasets with as many as 2 trillion zones, or grid points. VisIt loaded the data in parallel, performed two common visualization tasks, isosurfacing and volume rendering, and produced an image.
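To give a sense of the per-core load, suppose the largest dataset were divided evenly across all cores. This is an assumption for illustration only; the article does not describe how VisIt decomposed the data. Each core would then handle about 62.5 million zones:

$$
\frac{2\times10^{12}\ \text{zones}}{3.2\times10^{4}\ \text{cores}} = 6.25\times10^{7}\ \text{zones per core}
$$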

“These results are the largest-ever problem sizes and the largest degree of concurrency ever attempted within the DOE visualization research community. They show that visualization research and development efforts have produced technology that is today capable of ingesting and processing tomorrow's datasets,” says E. Wes Bethel of the Lawrence Berkeley National Laboratory (Berkeley Lab). He is a co-leader of the Visualization and Analytics Center for Enabling Technologies (VACET), which is part of DOE's SciDAC program.

“NERSC plays a valuable role in providing facilities for conducting computer and computational science research, which includes experiments like the one we did,” adds Bethel.

About NERSC and Berkeley Lab

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists at national laboratories and universities researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines. Berkeley Lab is a DOE national laboratory located in Berkeley, California. It conducts unclassified scientific research and is managed by the University of California for the U.S. Department of Energy.